OpenCV: unproject 2D points to 3D with known depth Z

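Problem statement

I am trying to reproject 2D points to their original 3D coordinates, assuming I know the distance of each point. Following the OpenCV documentation, I managed to get this working with zero distortion. However, when distortion is present, the result is incorrect.

Current approach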

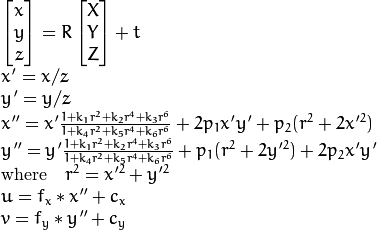

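So the idea is to reverse the projection with distortion into the projection without distortion, by:

- Removing any distortion using cv::undistortPoints
- Using the intrinsics to get back to normalized camera coordinates by inverting the second equation above
- Multiplying by z to undo the normalization.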
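Written out, the last two steps amount to inverting the pinhole equations. This is only a sketch of what the code below computes: $(u, v)$ is assumed to be the undistorted pixel returned by the first step and $(X, Y, Z)$ the point in the camera frame (both symbols are introduced here for illustration), while $f_x, f_y, c_x, c_y$ are the entries of the intrinsic matrix.

```latex
\begin{aligned}
u &= f_x \frac{X}{Z} + c_x, & v &= f_y \frac{Y}{Z} + c_y \\
X &= \frac{u - c_x}{f_x}\, Z, & Y &= \frac{v - c_y}{f_y}\, Z
\end{aligned}
```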

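Questions

- Why do I need to subtract f_x and f_y to get back to normalized camera coordinates (found empirically while testing)? In the code below, in step 2, if I don't subtract, even the undistorted result is off. (This was my mistake: I messed up the indexes.)
- If I include distortion, the result is wrong. What am I doing wrong?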

Sample code (C++)

    #include <cassert>
    #include <iostream>
    #include <vector>

    #include <opencv2/calib3d/calib3d.hpp>
    #include <opencv2/core/core.hpp>
    #include <opencv2/imgproc/imgproc.hpp>

    // Projects camera-frame 3D points to pixels (zero rotation and translation).
    std::vector<cv::Point2d> Project(const std::vector<cv::Point3d>& points,
                                     const cv::Mat& intrinsic,
                                     const cv::Mat& distortion) {
      std::vector<cv::Point2d> result;
      if (!points.empty()) {
        cv::projectPoints(points, cv::Mat(3, 1, CV_64F, cv::Scalar(0.)),
                          cv::Mat(3, 1, CV_64F, cv::Scalar(0.)), intrinsic,
                          distortion, result);
      }
      return result;
    }

    // Recovers camera-frame 3D points from pixels and their known depth Z.
    // Z holds either one depth shared by all points or one depth per point.
    std::vector<cv::Point3d> Unproject(const std::vector<cv::Point2d>& points,
                                       const std::vector<double>& Z,
                                       const cv::Mat& intrinsic,
                                       const cv::Mat& distortion) {
      double f_x = intrinsic.at<double>(0, 0);
      double f_y = intrinsic.at<double>(1, 1);
      double c_x = intrinsic.at<double>(0, 2);
      double c_y = intrinsic.at<double>(1, 2);
      // This was an error before:
      // double c_x = intrinsic.at<double>(0, 3);
      // double c_y = intrinsic.at<double>(1, 3);

      // Step 1. Undistort
      std::vector<cv::Point2d> points_undistorted;
      assert(Z.size() == 1 || Z.size() == points.size());
      if (!points.empty()) {
        // Passing intrinsic as P keeps the output in pixel coordinates.
        cv::undistortPoints(points, points_undistorted, intrinsic,
                            distortion, cv::noArray(), intrinsic);
      }

      // Step 2. Reproject
      std::vector<cv::Point3d> result;
      result.reserve(points.size());
      for (size_t idx = 0; idx < points_undistorted.size(); ++idx) {
        const double z = Z.size() == 1 ? Z[0] : Z[idx];
        result.push_back(
            cv::Point3d((points_undistorted[idx].x - c_x) / f_x * z,
                        (points_undistorted[idx].y - c_y) / f_y * z, z));
      }
      return result;
    }

    int main() {
      const double f_x = 1000.0;
      const double f_y = 1000.0;
      const double c_x = 1000.0;
      const double c_y = 1000.0;
      const cv::Mat intrinsic =
          (cv::Mat_<double>(3, 3) << f_x, 0.0, c_x, 0.0, f_y, c_y, 0.0, 0.0, 1.0);
      // Four coefficients (k1, k2, p1, p2), so the matrix is 4x1; the original
      // 5x1 matrix left its fifth element uninitialized.
      const cv::Mat distortion =
          // (cv::Mat_<double>(4, 1) << 0.0, 0.0, 0.0, 0.0);  // This works!
          (cv::Mat_<double>(4, 1) << -0.32, 1.24, 0.0013, 0.0013);  // This doesn't!

      // Single point test.
      const cv::Point3d point_single(-10.0, 2.0, 12.0);
      const cv::Point2d point_single_projected =
          Project({point_single}, intrinsic, distortion)[0];
      const cv::Point3d point_single_unprojected = Unproject(
          {point_single_projected}, {point_single.z}, intrinsic, distortion)[0];

      std::cout << "Expected Point: " << point_single.x;
      std::cout << " " << point_single.y;
      std::cout << " " << point_single.z << std::endl;
      std::cout << "Computed Point: " << point_single_unprojected.x;
      std::cout << " " << point_single_unprojected.y;
      std::cout << " " << point_single_unprojected.z << std::endl;
    }

The same code (Python)

    import cv2
    import numpy as np

    def Project(points, intrinsic, distortion):
        # Project camera-frame 3D points to pixels (zero rotation and translation).
        result = []
        rvec = tvec = np.array([0.0, 0.0, 0.0])
        if len(points) > 0:
            result, _ = cv2.projectPoints(points, rvec, tvec,
                                          intrinsic, distortion)
        return np.squeeze(result, axis=1)

    def Unproject(points, Z, intrinsic, distortion):
        f_x = intrinsic[0, 0]
        f_y = intrinsic[1, 1]
        c_x = intrinsic[0, 2]
        c_y = intrinsic[1, 2]
        # This was an error before
        # c_x = intrinsic[0, 3]
        # c_y = intrinsic[1, 3]

        # Step 1. Undistort.
        points_undistorted = np.array([])
        if len(points) > 0:
            # Passing P=intrinsic keeps the output in pixel coordinates.
            points_undistorted = cv2.undistortPoints(
                np.expand_dims(points, axis=1), intrinsic, distortion, P=intrinsic)
        points_undistorted = np.squeeze(points_undistorted, axis=1)

        # Step 2. Reproject.
        result = []
        for idx in range(points_undistorted.shape[0]):
            z = Z[0] if len(Z) == 1 else Z[idx]
            x = (points_undistorted[idx, 0] - c_x) / f_x * z
            y = (points_undistorted[idx, 1] - c_y) / f_y * z
            result.append([x, y, z])
        return result

    f_x = 1000.
    f_y = 1000.
    c_x = 1000.
    c_y = 1000.

    intrinsic = np.array([
        [f_x, 0.0, c_x],
        [0.0, f_y, c_y],
        [0.0, 0.0, 1.0]
    ])

    distortion = np.array([0.0, 0.0, 0.0, 0.0])  # This works!
    distortion = np.array([-0.32, 1.24, 0.0013, 0.0013])  # This doesn't!

    point_single = np.array([[-10.0, 2.0, 12.0],])
    point_single_projected = Project(point_single, intrinsic, distortion)
    Z = np.array([point[2] for point in point_single])
    point_single_unprojected = Unproject(point_single_projected,
                                         Z,
                                         intrinsic, distortion)
    print("Expected point:", point_single[0])
    print("Computed point:", point_single_unprojected[0])

With zero distortion (as mentioned above), the result is correct:

    Expected Point: -10 2 12
    Computed Point: -10 2 12

But when distortion is included, the result is off:

    Expected Point: -10 2 12
    Computed Point: -4.26634 0.848872 12

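Update 1. Clarification

This is a camera-to-image projection: I am assuming the 3D points are given in camera-frame coordinates.

Update 2. Figured out the first question

OK, I figured out the subtraction of f_x and f_y: I was careless and messed up the indexes. I updated the code to correct this. The other question still stands.

Update 3. Added equivalent Python code

To increase visibility, I added the Python code, since it has the same error.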

1 answer:

Answer 0 (score: 2)

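Answer to question 2

I found the problem: the 3D point coordinates matter! I had assumed that the reconstruction would work no matter which 3D points I chose. However, I noticed something strange: when using a range of 3D points, only a subset of them were reconstructed correctly. After further investigation, I found that only points that fall within the camera's field of view are reconstructed correctly. The field of view is a function of the intrinsic parameters (and vice versa).

For the code above to work, try setting the parameters as follows (the intrinsics are those of my camera):

    ...
    const double f_x = 2746.;
    const double f_y = 2748.;
    const double c_x = 991.;
    const double c_y = 619.;
    ...
    const cv::Point3d point_single(10.0, -2.0, 30.0);
    ...

Also, don't forget that in camera coordinates negative y is UP :)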
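One way to check the field-of-view explanation before calling OpenCV is to project the point by hand and see whether it lands inside the image. The sketch below is plain Python and assumes the four-coefficient (k1, k2, p1, p2) distortion model. The helper names project_pixel and in_view, both image sizes, and the reuse of the question's distortion coefficients with the answer's intrinsics are assumptions made for illustration, not values taken from the posts.

```python
def project_pixel(point, fx, fy, cx, cy, dist):
    """Project a camera-frame 3D point using the (k1, k2, p1, p2) distortion model."""
    k1, k2, p1, p2 = dist
    x, y = point[0] / point[2], point[1] / point[2]  # normalized coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * xd + cx, fy * yd + cy


def in_view(point, fx, fy, cx, cy, dist, width, height):
    """True if the point is in front of the camera and lands inside the image."""
    if point[2] <= 0:
        return False
    u, v = project_pixel(point, fx, fy, cx, cy, dist)
    return 0.0 <= u < width and 0.0 <= v < height


DIST = (-0.32, 1.24, 0.0013, 0.0013)

# Question's setup; the 2000x2000 image size is a guess (twice the principal point).
print(in_view((-10.0, 2.0, 12.0), 1000.0, 1000.0, 1000.0, 1000.0, DIST, 2000, 2000))
# Answer's setup; the 1982x1238 image size is likewise a guess.
print(in_view((10.0, -2.0, 30.0), 2746.0, 2748.0, 991.0, 619.0, DIST, 1982, 1238))
```

Under these assumed image sizes, the question's point projects to a negative u and is reported as out of view, while the answer's point lands inside the image.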

Answer to question 1

There was a bug: I was trying to access the intrinsics using

    ...
    double f_x = intrinsic.at<double>(0, 0);
    double f_y = intrinsic.at<double>(1, 1);
    double c_x = intrinsic.at<double>(0, 3);
    double c_y = intrinsic.at<double>(1, 3);
    ...

But intrinsic is a 3x3 matrix.

Moral of the story: write unit tests!!!
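As a rough illustration of the kind of unit test meant here, the sketch below round-trips a few points through a distortion-free pinhole model in plain Python. The function names project, unproject and test_round_trip, the nested-list intrinsic matrix and the tolerance are all hypothetical; this is not the code from the question, only a minimal stand-in with the same arithmetic in step 2.

```python
def project(point, K):
    """Pinhole projection of a camera-frame point; K is a 3x3 nested list."""
    X, Y, Z = point
    return (K[0][0] * X / Z + K[0][2], K[1][1] * Y / Z + K[1][2])


def unproject(pixel, z, K):
    """Invert the pinhole projection for a pixel with known depth z."""
    u, v = pixel
    return ((u - K[0][2]) / K[0][0] * z, (v - K[1][2]) / K[1][1] * z, z)


def test_round_trip():
    K = [[1000.0, 0.0, 1000.0], [0.0, 1000.0, 1000.0], [0.0, 0.0, 1.0]]
    for point in [(-10.0, 2.0, 12.0), (10.0, -2.0, 30.0), (0.0, 0.0, 5.0)]:
        restored = unproject(project(point, K), point[2], K)
        # Every coordinate should come back within a small tolerance.
        assert all(abs(a - b) < 1e-9 for a, b in zip(point, restored))


test_round_trip()
print("round trip OK")
```

A test of this shape fails as soon as the principal point is read from the wrong place, because the restored x and y no longer match the input.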
