How to create an autoencoder using Dropout in Dense layers with Keras

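Concept:

I am trying to reconstruct the output of a numerical dataset, and to do so I am trying different approaches with autoencoders. One approach is to use Dropout in the Dense layers.

Problem: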

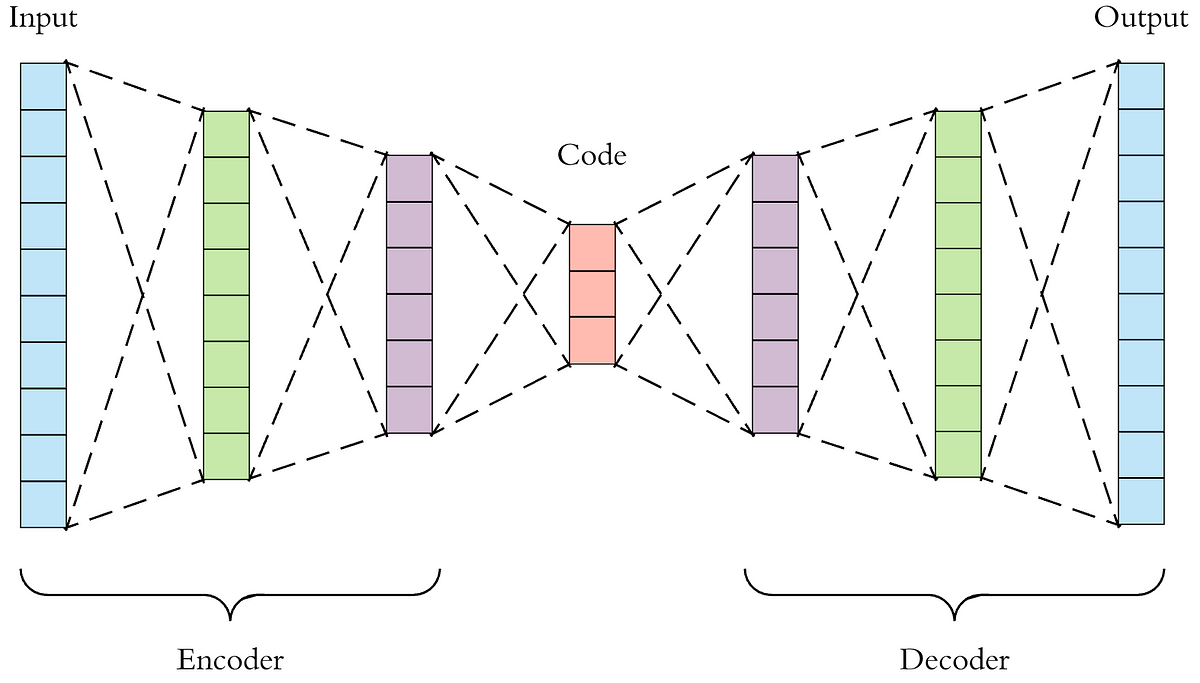

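As the figure shows, the network has two parts, an encoder and a decoder, and the dimensionality shrinks toward the center. This is where the problem starts, because the Dense layers with Dropout do not pick the dimensionality back up.

Note that this is the second approach I have tried with autoencoders; I have already worked through the demonstration here.

This is how I (naively) wrote it: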

    from keras import models
    from keras import backend as K
    from keras.layers import Dense, Dropout  # Dense and Dropout are used unqualified below

    network = models.Sequential()

    input_shape = x_train_clean.shape[1]  # input_shape = 3714
    outer_layer = int(input_shape / 7)
    inner_layer = int(input_shape / 14)

    network.add(Dropout(0.2, input_shape=(input_shape,)))
    network.add(Dense(units=outer_layer, activation='relu'))
    network.add(Dropout(0.2))
    network.add(Dense(units=inner_layer, activation='relu'))
    network.add(Dropout(0.2))
    network.add(Dense(units=10, activation='linear'))
    network.add(Dropout(0.2))
    network.add(Dense(units=inner_layer, activation='relu'))
    network.add(Dropout(0.2))
    network.add(Dense(units=outer_layer, activation='relu'))
    network.add(Dropout(0.2))

    network.compile(loss=lambda true, pred: K.sqrt(K.mean(K.square(pred - true))),  # RMSE
                    optimizer='rmsprop',   # Root Mean Square Propagation
                    metrics=['accuracy'])  # Accuracy performance metric

    history = network.fit(x_train_noisy,   # Features
                          x_train_clean,   # Target vector
                          epochs=3,        # Number of epochs
                          verbose=0,       # No output
                          batch_size=100,  # Number of observations per batch
                          shuffle=True)    # Training data is reshuffled at each epoch

Output:

The error output is quite clear:

    tensorflow.python.framework.errors_impl.InvalidArgumentError: Incompatible shapes: [100,530] vs. [100,3714] [[{{node loss_1/dropout_9_loss/sub}}]]

It cannot go from the lower dimension back up to the higher one.

Open questions:

- Is it even possible to use Dropout in an autoencoder?

- If the Sequential model is the problem, which layers could I try instead?

1 Answer:

Answer 0 (score: 0)

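The error you are seeing has nothing to do with the network's ability to learn. network.summary() reveals that the output shape is (None, 530) while the input shape is (None, 3714), and that mismatch causes the error at training time. The last Dense layer has outer_layer = int(3714 / 7) = 530 units, so the loss ends up comparing a 530-wide prediction against a 3714-wide target.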
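To make the shape argument concrete without building a model, here is a minimal sketch in plain Python that follows the width of the tensor through the layer stack from the question. The helper `trace_widths` and the lists `question_stack` and `fixed_stack` are hypothetical names introduced only for this illustration; they are not part of Keras. The sketch relies on two facts: a Dense layer sets the output width to its `units`, and a Dropout layer leaves the width unchanged.

```python
def trace_widths(input_dim, layer_specs):
    """Return the output width after each layer in layer_specs.

    layer_specs is a list of ("dense", units) or ("dropout", rate) tuples.
    Dense changes the width to `units`; Dropout keeps the width as it is.
    """
    widths = []
    width = input_dim
    for kind, value in layer_specs:
        if kind == "dense":
            width = value
        elif kind != "dropout":
            raise ValueError(f"unknown layer kind: {kind}")
        widths.append(width)
    return widths


input_dim = 3714
outer_layer = int(input_dim / 7)    # 530
inner_layer = int(input_dim / 14)   # 265

# Same order of layers as in the question.
question_stack = [
    ("dropout", 0.2), ("dense", outer_layer),
    ("dropout", 0.2), ("dense", inner_layer),
    ("dropout", 0.2), ("dense", 10),
    ("dropout", 0.2), ("dense", inner_layer),
    ("dropout", 0.2), ("dense", outer_layer),
    ("dropout", 0.2),
]

# Assumed fix: one more Dense layer that projects back to the input width.
fixed_stack = question_stack + [("dense", input_dim)]

print(trace_widths(input_dim, question_stack)[-1])  # width compared with the 3714-wide target
print(trace_widths(input_dim, fixed_stack)[-1])
```

With the question's stack the final width is 530, which is exactly the left-hand shape in the error message; with the extra projection layer the final width matches the 3714-wide target.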

Inputs that cause the error during training:

    x_train_noisy = np.zeros([100, 3714])  # just to test
    x_train_clean = np.ones([100, 3714])

    tensorflow.python.framework.errors_impl.InvalidArgumentError: Incompatible shapes: [100,530] vs. [100,3714]

Inputs that train without error:

    x_train_noisy = np.zeros([100, 3714])  # just to test
    x_train_clean = np.ones([100, 530])

    100/100 [==============================] - 1s 11ms/step - loss: 1.0000 - acc: 1.0000
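Shrinking the target to 530 columns only confirms the diagnosis; for an autoencoder the target should keep the input width. One possible fix, sketched below under some assumptions, is to end the decoder with a Dense layer of 3714 units. The sketch uses the `tensorflow.keras` import path and a `keras.Input` first layer (the question used standalone `keras`), swaps the custom RMSE lambda for the built-in `'mse'` loss, and drops the `accuracy` metric because it is a classification metric. Leaving out Dropout after the output layer is also my choice here, since it would zero part of the reconstruction during training. The dummy arrays are the same test inputs as above.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers, models

input_dim = 3714                    # width of x_train_clean in the question
outer_layer = int(input_dim / 7)    # 530
inner_layer = int(input_dim / 14)   # 265

network = models.Sequential([
    keras.Input(shape=(input_dim,)),
    # Encoder
    layers.Dropout(0.2),
    layers.Dense(outer_layer, activation='relu'),
    layers.Dropout(0.2),
    layers.Dense(inner_layer, activation='relu'),
    layers.Dropout(0.2),
    layers.Dense(10, activation='linear'),        # bottleneck
    # Decoder
    layers.Dropout(0.2),
    layers.Dense(inner_layer, activation='relu'),
    layers.Dropout(0.2),
    layers.Dense(outer_layer, activation='relu'),
    # Assumed fix: project back to the input width, with no Dropout after it.
    layers.Dense(input_dim, activation='linear'),
])

# 'mse' is swapped in for the question's RMSE lambda (an assumption of this sketch).
network.compile(loss='mse', optimizer='rmsprop')

# Dummy data with matching widths, just to check that training runs.
x_train_noisy = np.zeros([100, input_dim], dtype='float32')
x_train_clean = np.ones([100, input_dim], dtype='float32')

history = network.fit(x_train_noisy, x_train_clean,
                      epochs=1, batch_size=100, verbose=0)

print(network.output_shape)
```

This also suggests an answer to the first open question: Dropout between Dense layers is compatible with an autoencoder, as long as the last layer restores the input width.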
