TensorflowејәеҢ–еӯҰд№ жЁЎеһӢеҮ д№ҺдёҚдјҡиҮӘе·ұеҒҡеҮәеҶіе®ҡпјҢд№ҹдёҚдјҡеӯҰд№ гҖӮ

жҲ‘жӯЈеңЁе°қиҜ•еҲӣе»әдёҖдёӘеҸҜд»Ҙиҙӯд№°пјҢеҮәе”®жҲ–жҢҒжңүиӮЎзҘЁеӨҙеҜёзҡ„ејәеҢ–еӯҰд№ д»ЈзҗҶгҖӮжҲ‘йҒҮеҲ°зҡ„й—®йўҳжҳҜпјҢеҚідҪҝи¶…иҝҮ2000йӣҶпјҢд»ЈзҗҶе•Ҷд»Қз„¶ж— жі•дәҶи§ЈдҪ•ж—¶иҙӯд№°пјҢеҮәе”®жҲ–жҢҒжңүгҖӮиҝҷжҳҜ第2100йӣҶзҡ„еӣҫзүҮпјҢиҜҰз»ҶиҜҙжҳҺдәҶжҲ‘зҡ„ж„ҸжҖқпјҢйҷӨйқһйҡҸжңәпјҢзү№е·ҘдёҚдјҡйҮҮеҸ–д»»дҪ•иЎҢеҠЁгҖӮ

д»ЈзҗҶеӯҰд№ дҪҝз”ЁйҮҚж’ӯеҶ…еӯҳпјҢ并且жҲ‘е·Із»ҸдёӨж¬Ўе’Ңдёүж¬ЎжЈҖжҹҘжҳҜеҗҰжІЎжңүй”ҷиҜҜгҖӮиҝҷжҳҜд»ЈзҗҶзҡ„д»Јз Ғпјҡ

В В В В е°ҶnumpyеҜје…Ҙдёәnp

В В В В е°ҶtensorflowдҪңдёәtfеҜје…Ҙ

В В В В йҡҸжңәеҜје…Ҙ

В В В В жқҘиҮӘ收и—Ҹе“ҒиҝӣеҸЈеҸҢз«ҜйҳҹеҲ—

В В В В д»Һ.agent import Agent

д»ЈзҗҶеӯҰд№ дҪҝз”ЁйҮҚж’ӯеҶ…еӯҳпјҢ并且жҲ‘е·Із»ҸдёӨж¬Ўе’Ңдёүж¬ЎжЈҖжҹҘжҳҜеҗҰжІЎжңүй”ҷиҜҜгҖӮиҝҷжҳҜд»ЈзҗҶзҡ„д»Јз Ғпјҡ

В В В В е°ҶnumpyеҜје…Ҙдёәnp

В В В В е°ҶtensorflowдҪңдёәtfеҜје…Ҙ

В В В В йҡҸжңәеҜје…Ҙ

В В В В жқҘиҮӘ收и—Ҹе“ҒиҝӣеҸЈеҸҢз«ҜйҳҹеҲ—

В В В В д»Һ.agent import Agent

class Agent(Agent):

def __init__(self, state_size = 7, window_size = 1, action_size = 3,

batch_size = 32, gamma=.95, epsilon=.95, epsilon_decay=.95, epsilon_min=.01,

learning_rate=.001, is_eval=False, model_name="", stock_name="", episode=1):

"""

state_size: Size of the state coming from the environment

action_size: How many decisions the algo will make in the end

gamma: Decay rate to discount future reward

epsilon: Rate of randomly decided action

epsilon_decay: Rate of decrease in epsilon

epsilon_min: The lowest epsilon can get (limit to the randomness)

learning_rate: Progress of neural net in each iteration

episodes: How many times data will be run through

"""

self.state_size = state_size

self.window_size = window_size

self.action_size = action_size

self.batch_size = batch_size

self.gamma = gamma

self.epsilon = epsilon

self.epsilon_decay = epsilon_decay

self.epsilon_min = epsilon_min

self.learning_rate = learning_rate

self.is_eval = is_eval

self.model_name = model_name

self.stock_name = stock_name

self.q_values = []

self.layers = [150, 150, 150]

tf.reset_default_graph()

self.sess = tf.Session(config=tf.ConfigProto(allow_soft_placement = True))

self.memory = deque()

if self.is_eval:

model_name = stock_name + "-" + str(episode)

self._model_init()

# "models/{}/{}/{}".format(stock_name, model_name, model_name + "-" + str(episode) + ".meta")

self.saver = tf.train.Saver()

self.saver.restore(self.sess, tf.train.latest_checkpoint("models/{}/{}".format(stock_name, model_name)))

# self.graph = tf.get_default_graph()

# names=[tensor.name for tensor in tf.get_default_graph().as_graph_def().node]

# self.X_input = self.graph.get_tensor_by_name("Inputs/Inputs:0")

# self.logits = self.graph.get_tensor_by_name("Output/Add:0")

else:

self._model_init()

self.sess.run(self.init)

self.saver = tf.train.Saver()

path = "models/{}/6".format(self.stock_name)

self.writer = tf.summary.FileWriter(path)

self.writer.add_graph(self.sess.graph)

def _model_init(self):

"""

Init tensorflow graph vars

"""

# (1,10,9)

with tf.device("/device:GPU:0"):

with tf.name_scope("Inputs"):

self.X_input = tf.placeholder(tf.float32, [None, self.state_size], name="Inputs")

self.Y_input = tf.placeholder(tf.float32, [None, self.action_size], name="Actions")

self.rewards = tf.placeholder(tf.float32, [None, ], name="Rewards")

# self.lstm_cells = [tf.contrib.rnn.GRUCell(num_units=layer)

# for layer in self.layers]

#lstm_cell = tf.contrib.rnn.LSTMCell(num_units=n_neurons, use_peepholes=True)

#gru_cell = tf.contrib.rnn.GRUCell(num_units=n_neurons)

# self.multi_cell = tf.contrib.rnn.MultiRNNCell(self.lstm_cells)

# self.outputs, self.states = tf.nn.dynamic_rnn(self.multi_cell, self.X_input, dtype=tf.float32)

# self.top_layer_h_state = self.states[-1]

# with tf.name_scope("Output"):

# self.out_weights=tf.Variable(tf.truncated_normal([self.layers[-1], self.action_size]))

# self.out_bias=tf.Variable(tf.zeros([self.action_size]))

# self.logits = tf.add(tf.matmul(self.top_layer_h_state,self.out_weights), self.out_bias)

fc1 = tf.contrib.layers.fully_connected(self.X_input, 512, activation_fn=tf.nn.relu)

fc2 = tf.contrib.layers.fully_connected(fc1, 512, activation_fn=tf.nn.relu)

fc3 = tf.contrib.layers.fully_connected(fc2, 512, activation_fn=tf.nn.relu)

fc4 = tf.contrib.layers.fully_connected(fc3, 512, activation_fn=tf.nn.relu)

self.logits = tf.contrib.layers.fully_connected(fc4, self.action_size, activation_fn=None)

with tf.name_scope("Cross_Entropy"):

self.loss_op = tf.losses.mean_squared_error(self.Y_input,self.logits)

self.optimizer = tf.train.RMSPropOptimizer(learning_rate=self.learning_rate)

self.train_op = self.optimizer.minimize(self.loss_op)

# self.correct = tf.nn.in_top_k(self.logits, self.Y_input, 1)

# self.accuracy = tf.reduce_mean(tf.cast(self., tf.float32))

tf.summary.scalar("Reward", tf.reduce_mean(self.rewards))

tf.summary.scalar("MSE", self.loss_op)

# Merge all of the summaries

self.summ = tf.summary.merge_all()

self.init = tf.global_variables_initializer()

def remember(self, state, action, reward, next_state, done):

self.memory.append((state, action, reward, next_state, done))

def act(self, state):

if np.random.rand() <= self.epsilon and not self.is_eval:

prediction = random.randrange(self.action_size)

if prediction == 1 or prediction == 2:

print("Random")

return prediction

act_values = self.sess.run(self.logits, feed_dict={self.X_input: state.reshape((1, self.state_size))})

if np.argmax(act_values[0]) == 1 or np.argmax(act_values[0]) == 2:

pass

return np.argmax(act_values[0])

def replay(self, time, episode):

print("Replaying")

mini_batch = []

l = len(self.memory)

for i in range(l - self.batch_size + 1, l):

mini_batch.append(self.memory[i])

mean_reward = []

x = np.zeros((self.batch_size, self.state_size))

y = np.zeros((self.batch_size, self.action_size))

for i, (state, action, reward, next_state, done) in enumerate(mini_batch):

target = reward

if not done:

self.target = reward + self.gamma * np.amax(self.sess.run(self.logits, feed_dict = {self.X_input: next_state.reshape((1, self.state_size))})[0])

current_q = (self.sess.run(self.logits, feed_dict={self.X_input: state.reshape((1, self.state_size))}))

current_q[0][action] = self.target

x[i] = state

y[i] = current_q.reshape((self.action_size))

mean_reward.append(self.target)

#target_f = np.array(target_f).reshape(self.batch_size - 1, self.action_size)

#target_state = np.array(target_state).reshape(self.batch_size - 1, self.window_size, self.state_size)

_, c, s = self.sess.run([self.train_op, self.loss_op, self.summ], feed_dict={self.X_input: x, self.Y_input: y, self.rewards: mean_reward}) # Add self.summ into the sess.run for tensorboard

self.writer.add_summary(s, global_step=(episode+1)/(time+1))

if self.epsilon > self.epsilon_min:

self.epsilon *= self.epsilon_decay

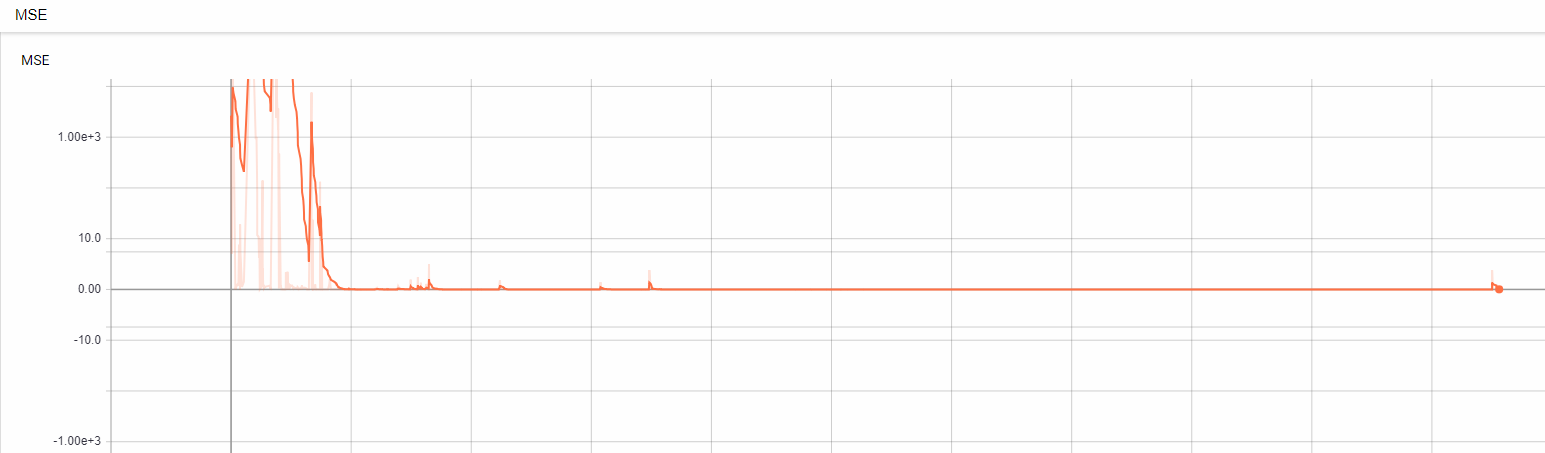

дёҖж—ҰйҮҚж’ӯеҶ…еӯҳеӨ§дәҺжү№еӨ„зҗҶеӨ§е°ҸпјҢе®ғе°ҶиҝҗиЎҢйҮҚж’ӯеҠҹиғҪгҖӮиҜҘд»Јз ҒеҸҜиғҪзңӢиө·жқҘжңүдәӣж··д№ұпјҢеӣ дёәжҲ‘дёҖзӣҙжғіеј„жё…жҘҡе®ғе·ІжңүеҘҪеҮ еӨ©дәҶгҖӮиҝҷжҳҜеј йҮҸжқҝзҡ„MSEеұҸ幕жҲӘеӣҫгҖӮ

еҰӮжӮЁжүҖи§ҒпјҢ第200йӣҶж—¶пјҢMSEжӯ»дәЎдёә0жҲ–еҮ д№Һдёә0гҖӮжҲ‘еҫҲеӣ°жғ‘пјҒжҲ‘дёҚзҹҘйҒ“еҸ‘з”ҹдәҶд»Җд№ҲгҖӮиҜ·её®жҲ‘и§ЈеҶіиҝҷдёӘй—®йўҳгҖӮе°Ҷд»Јз ҒеҸ‘еёғеңЁhereдёҠпјҢд»ҘжҹҘзңӢеҢ…жӢ¬зҒ«иҪҰе’ҢиҜ„дј°ж–Ү件еңЁеҶ…зҡ„е…ЁйғЁеҶ…е®№гҖӮжҲ‘дёҖзӣҙеңЁе·ҘдҪңзҡ„дё»иҰҒд»ЈзҗҶжҳҜthaд»ЈзҗҶж–Ү件еӨ№дёӯзҡ„LSTM.pyгҖӮи°ўи°ўпјҒ

еҰӮжӮЁжүҖи§ҒпјҢ第200йӣҶж—¶пјҢMSEжӯ»дәЎдёә0жҲ–еҮ д№Һдёә0гҖӮжҲ‘еҫҲеӣ°жғ‘пјҒжҲ‘дёҚзҹҘйҒ“еҸ‘з”ҹдәҶд»Җд№ҲгҖӮиҜ·её®жҲ‘и§ЈеҶіиҝҷдёӘй—®йўҳгҖӮе°Ҷд»Јз ҒеҸ‘еёғеңЁhereдёҠпјҢд»ҘжҹҘзңӢеҢ…жӢ¬зҒ«иҪҰе’ҢиҜ„дј°ж–Ү件еңЁеҶ…зҡ„е…ЁйғЁеҶ…е®№гҖӮжҲ‘дёҖзӣҙеңЁе·ҘдҪңзҡ„дё»иҰҒд»ЈзҗҶжҳҜthaд»ЈзҗҶж–Ү件еӨ№дёӯзҡ„LSTM.pyгҖӮи°ўи°ўпјҒ

2 дёӘзӯ”жЎҲ:

зӯ”жЎҲ 0 :(еҫ—еҲҶпјҡ1)

жӯЈеҰӮиҜҘй—®йўҳзҡ„иҜ„и®әжүҖиҝ°пјҢиҝҷдјјд№ҺжҳҜеӯҰд№ зҺҮдёӢйҷҚзҡ„й—®йўҳгҖӮ

жң¬иҙЁдёҠпјҢжҜҸйӣҶпјҢжӮЁзҡ„еӯҰд№ зҺҮйғҪд№ҳд»ҘжҹҗдёӘеӣ еӯҗ j пјҢиҝҷж„Ҹе‘ізқҖ n дёӘйӣҶ/зәӘе…ғд№ӢеҗҺзҡ„еӯҰд№ зҺҮе°ҶзӯүдәҺ

lr = initial_lr * j ^ n гҖӮеңЁжҲ‘们зҡ„зӨәдҫӢдёӯпјҢиЎ°еҮҸи®ҫзҪ®дёә0.95пјҢиҝҷж„Ҹе‘ізқҖд»…з»ҸиҝҮеҮ ж¬Ўиҝӯд»ЈпјҢеӯҰд№ зҺҮе°ұе·Із»ҸеӨ§еӨ§дёӢйҷҚгҖӮйҡҸеҗҺпјҢжӣҙж–°е°Ҷд»…жү§иЎҢеҫ®е°Ҹзҡ„жӣҙжӯЈпјҢиҖҢдёҚеҶҚвҖңеӯҰд№ вҖқйқһеёёйҮҚиҰҒзҡ„жӣҙж”№гҖӮ

иҝҷеҜјиҮҙдәҶдёҖдёӘй—®йўҳпјҡдёәд»Җд№ҲиЎ°еҮҸе®Ңе…Ёжңүж„Ҹд№үпјҹдёҖиҲ¬жқҘиҜҙпјҢжҲ‘们иҰҒиҫҫеҲ°еұҖйғЁжңҖдјҳпјҲеҸҜиғҪйқһеёёзӢӯзӘ„пјүгҖӮдёәжӯӨпјҢжҲ‘们е°қиҜ•дҪҝвҖңзӣёеҜ№жҺҘиҝ‘вҖқеҲ°иҝҷж ·зҡ„жңҖе°ҸеҖјпјҢ然еҗҺд»…еҒҡиҫғе°Ҹзҡ„еўһйҮҸдҪҝжҲ‘们иҫҫеҲ°жӯӨжңҖдҪіеҖјгҖӮеҰӮжһңжҲ‘们еҸӘжҳҜ继з»ӯдҝқжҢҒеҺҹе§Ӣзҡ„еӯҰд№ йҖҹеәҰпјҢйӮЈеҸҜиғҪжҳҜжҲ‘们жҜҸж¬ЎйғҪеҸӘжҳҜз®ҖеҚ•ең°и·іиҝҮжңҖдҪіи§ЈеҶіж–№жЎҲпјҢиҖҢд»ҺжңӘиҫҫеҲ°жҲ‘们зҡ„зӣ®ж ҮгҖӮ

д»Һи§Ҷи§үдёҠзңӢпјҢеҸҜд»ҘйҖҡиҝҮд»ҘдёӢеӣҫеҪўжҖ»з»“й—®йўҳпјҡ

йҷӨиЎ°еҮҸд№ӢеӨ–зҡ„еҸҰдёҖз§Қж–№жі•жҳҜпјҢдёҖж—Ұз®—жі•дёҚеҶҚиҫҫеҲ°д»»дҪ•йҮҚиҰҒзҡ„жӣҙж–°пјҢе°ұз®ҖеҚ•ең°е°ҶеӯҰд№ йҖҹзҺҮйҷҚдҪҺдёҖе®ҡйҮҸгҖӮиҝҷж ·еҸҜд»ҘйҒҝе…ҚеҮәзҺ°еҫҲеӨҡжғ…иҠӮиҖҢзәҜзІ№йҷҚдҪҺеӯҰд№ зҺҮзҡ„й—®йўҳгҖӮ

зү№еҲ«жҳҜеңЁжӮЁзҡ„жғ…еҶөдёӢпјҢиҫғй«ҳзҡ„иЎ°еҮҸеҖјпјҲеҚіиҫғж…ўзҡ„иЎ°еҮҸпјүдјјд№Һе·Із»Ҹиө·еҲ°дәҶеҫҲеӨ§дҪңз”ЁгҖӮ

зӯ”жЎҲ 1 :(еҫ—еҲҶпјҡ0)

ејәеҢ–еӯҰд№ дёӯзҡ„QеҖјдёҚд»ЈиЎЁвҖңеҘ–еҠұвҖқпјҢиҖҢжҳҜд»ЈиЎЁвҖңеӣһжҠҘвҖқпјҢеҚіеҪ“еүҚеҘ–еҠұе’ҢжңӘжқҘжҠҳжүЈзҡ„жҖ»е’ҢгҖӮеҪ“жӮЁзҡ„жЁЎеһӢиҝӣе…ҘжүҖжңүйӣ¶еҠЁдҪңзҡ„вҖңжӯ»и§’вҖқж—¶пјҢж №жҚ®жӮЁзҡ„и®ҫзҪ®пјҢеҘ–еҠұе°Ҷдёәйӣ¶гҖӮ然еҗҺз»ҸиҝҮдёҖж®өж—¶й—ҙпјҢжӮЁзҡ„йҮҚж’ӯе°Ҷе……ж»ЎвҖңйӣ¶зҡ„еҠЁдҪңдјҡеёҰжқҘйӣ¶зҡ„еӣһжҠҘвҖқзҡ„и®°еҝҶпјҢеӣ жӯӨпјҢж— и®әжӮЁеҰӮдҪ•жӣҙж–°жЁЎеһӢпјҢйғҪж— жі•ж‘Ҷи„ұиҝҷз§Қжӯ»иғЎеҗҢгҖӮ

жӯЈеҰӮ@dennlingerжүҖиҜҙпјҢжӮЁеҸҜд»ҘеўһеҠ epsilonд»ҘдҪҝжЁЎеһӢжңүдёҖдәӣж–°зҡ„и®°еҝҶиҰҒжӣҙж–°пјҢд№ҹеҸҜд»ҘдҪҝз”Ёдјҳе…Ҳзә§дҪ“йӘҢйҮҚж’ӯжқҘи®ӯз»ғвҖңжңүз”ЁвҖқзҡ„дҪ“йӘҢгҖӮ

дҪҶжҳҜпјҢжҲ‘е»әи®®жӮЁйҰ–е…Ҳе…іжіЁзҺҜеўғжң¬иә«гҖӮжӮЁзҡ„жЁЎеһӢиҫ“еҮәйӣ¶пјҢеӣ дёәжІЎжңүжӣҙеҘҪзҡ„йҖүжӢ©пјҢеҜ№еҗ—пјҹжӯЈеҰӮжӮЁжүҖиҜҙзҡ„пјҢжӮЁеңЁдәӨжҳ“иӮЎзҘЁж—¶пјҢжӮЁзЎ®е®ҡжңүи¶іеӨҹзҡ„дҝЎжҒҜеҸҜд»ҘеҜјиҮҙдёҖз§Қзӯ–з•ҘпјҢиҜҘзӯ–з•Ҙе°ҶеҜјиҮҙжӮЁиҺ·еҫ—еӨ§дәҺйӣ¶зҡ„еҘ–еҠұеҗ—пјҹжҲ‘и®ӨдёәжӮЁйңҖиҰҒеҜ№жӯӨиҝӣиЎҢд»»дҪ•и°ғж•ҙд№ӢеүҚпјҢйҰ–е…ҲиҰҒд»”з»ҶиҖғиҷ‘дёҖдёӢгҖӮдҫӢеҰӮпјҢеҰӮжһңиӮЎзҘЁд»ҘзәҜзІ№зҡ„йҡҸжңә50/50жңәдјҡдёҠж¶ЁжҲ–дёӢи·ҢпјҢйӮЈд№ҲжӮЁе°Ҷж°ёиҝңжүҫдёҚеҲ°иғҪеӨҹдҪҝе№іеқҮеӣһжҠҘеӨ§дәҺйӣ¶зҡ„зӯ–з•ҘгҖӮ

ејәеҢ–еӯҰд№ д»ЈзҗҶеҸҜиғҪе·Із»ҸжүҫеҲ°дәҶжңҖеҘҪзҡ„д»ЈзҗҶпјҢе°Ҫз®ЎиҝҷдёҚжҳҜжӮЁжғіиҰҒзҡ„гҖӮ

- еңЁQ LearningдёӯпјҢдҪ жҖҺд№ҲиғҪзңҹжӯЈиҺ·еҫ—QеҖјпјҹ QпјҲsпјҢaпјүдёҚдјҡж°ёиҝң继з»ӯдёӢеҺ»еҗ—пјҹ

- еңЁopenai cartpoleдёҠи®ӯз»ғеј йҮҸжөҒжЁЎеһӢ

- KerasжЁЎеһӢиҫ“еҮәеёёж•°еҖјдҪңдёәйў„жөӢ

- д»Һеј йҮҸжөҒжЁЎеһӢдёӯйҖүжӢ©еҠЁдҪңзҡ„жқғйҮҚ

- еҰӮдҪ•дҪҝз”ЁtfдјҡиҜқеҹәдәҺзҪ‘з»ңиҫ“еҮәжӣҙж–°жёёжҲҸзҠ¶жҖҒд»ҘиҝӣиЎҢејәеҢ–еӯҰд№

- TensorflowејәеҢ–еӯҰд№ жЁЎеһӢеҮ д№ҺдёҚдјҡиҮӘе·ұеҒҡеҮәеҶіе®ҡпјҢд№ҹдёҚдјҡеӯҰд№ гҖӮ

- д»·еҖјиҝӯд»Јж— жі•ж”¶ж•ӣ-马尔еҸҜеӨ«еҶізӯ–иҝҮзЁӢ

- A2Cж— жі•жӯЈеёёиҝҗиЎҢпјҢеҺҹеӣ жҳҜжү№иҜ„家зҡ„жҚҹеӨұжІЎжңү收ж•ӣ

- DQNз®—жі•ж— жі•еңЁCartPole-v0дёҠ收ж•ӣ

- еҰӮдҪ•е»әз«ӢеҶізӯ–ж ‘еӣһеҪ’жЁЎеһӢ

- жҲ‘еҶҷдәҶиҝҷж®өд»Јз ҒпјҢдҪҶжҲ‘ж— жі•зҗҶи§ЈжҲ‘зҡ„й”ҷиҜҜ

- жҲ‘ж— жі•д»ҺдёҖдёӘд»Јз Ғе®һдҫӢзҡ„еҲ—иЎЁдёӯеҲ йҷӨ None еҖјпјҢдҪҶжҲ‘еҸҜд»ҘеңЁеҸҰдёҖдёӘе®һдҫӢдёӯгҖӮдёәд»Җд№Ҳе®ғйҖӮз”ЁдәҺдёҖдёӘз»ҶеҲҶеёӮеңәиҖҢдёҚйҖӮз”ЁдәҺеҸҰдёҖдёӘз»ҶеҲҶеёӮеңәпјҹ

- жҳҜеҗҰжңүеҸҜиғҪдҪҝ loadstring дёҚеҸҜиғҪзӯүдәҺжү“еҚ°пјҹеҚўйҳҝ

- javaдёӯзҡ„random.expovariate()

- Appscript йҖҡиҝҮдјҡи®®еңЁ Google ж—ҘеҺҶдёӯеҸ‘йҖҒз”өеӯҗйӮ®д»¶е’ҢеҲӣе»әжҙ»еҠЁ

- дёәд»Җд№ҲжҲ‘зҡ„ Onclick з®ӯеӨҙеҠҹиғҪеңЁ React дёӯдёҚиө·дҪңз”Ёпјҹ

- еңЁжӯӨд»Јз ҒдёӯжҳҜеҗҰжңүдҪҝз”ЁвҖңthisвҖқзҡ„жӣҝд»Јж–№жі•пјҹ

- еңЁ SQL Server е’Ң PostgreSQL дёҠжҹҘиҜўпјҢжҲ‘еҰӮдҪ•д»Һ第дёҖдёӘиЎЁиҺ·еҫ—第дәҢдёӘиЎЁзҡ„еҸҜи§ҶеҢ–

- жҜҸеҚғдёӘж•°еӯ—еҫ—еҲ°

- жӣҙж–°дәҶеҹҺеёӮиҫ№з•Ң KML ж–Ү件зҡ„жқҘжәҗпјҹ