Unzipping a tuple-like text file

Given a text file whose lines are 3-tuples:

(0, 12, Tokenization)

(13, 15, is)

(16, 22, widely)

(23, 31, regarded)

(32, 34, as)

(35, 36, a)

(37, 43, solved)

(44, 51, problem)

(52, 55, due)

(56, 58, to)

(59, 62, the)

(63, 67, high)

(68, 76, accuracy)

(77, 81, that)

(82, 91, rulebased)

(92, 102, tokenizers)

(103, 110, achieve)

(110, 111, .)

(0, 3, But)

(4, 14, rule-based)

(15, 25, tokenizers)

(26, 29, are)

(30, 34, hard)

(35, 37, to)

(38, 46, maintain)

(47, 50, and)

(51, 56, their)

(57, 62, rules)

(63, 71, language)

(72, 80, specific)

(80, 81, .)

(0, 2, We)

(3, 7, show)

(8, 12, that)

(13, 17, high)

(18, 26, accuracy)

(27, 31, word)

(32, 35, and)

(36, 44, sentence)

(45, 57, segmentation)

(58, 61, can)

(62, 64, be)

(65, 73, achieved)

(74, 76, by)

(77, 82, using)

(83, 93, supervised)

(94, 102, sequence)

(103, 111, labeling)

(112, 114, on)

(115, 118, the)

(119, 128, character)

(129, 134, level)

(135, 143, combined)

(144, 148, with)

(149, 161, unsupervised)

(162, 169, feature)

(170, 178, learning)

(178, 179, .)

(0, 2, We)

(3, 12, evaluated)

(13, 16, our)

(17, 23, method)

(24, 26, on)

(27, 32, three)

(33, 42, languages)

(43, 46, and)

(47, 55, obtained)

(56, 61, error)

(62, 67, rates)

(68, 70, of)

(71, 75, 0.27)

(76, 77, ‰)

(78, 79, ()

(79, 86, English)

(86, 87, ))

(87, 88, ,)

(89, 93, 0.35)

(94, 95, ‰)

(96, 97, ()

(97, 102, Dutch)

(102, 103, ))

(104, 107, and)

(108, 112, 0.76)

(113, 114, ‰)

(115, 116, ()

(116, 123, Italian)

(123, 124, ))

(125, 128, for)

(129, 132, our)

(133, 137, best)

(138, 144, models)

(144, 145, .)

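The goal is to build two different data structures:

- sents_with_positions: a list of lists of tuples, where each tuple looks like a line of the text file

- sents_words: a list of tuples of strings, each made up of only the third element of the tuples on the lines of the text file

E.g., from the input text file: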

sents_words = [

('Tokenization', 'is', 'widely', 'regarded', 'as', 'a', 'solved',

'problem', 'due', 'to', 'the', 'high', 'accuracy', 'that', 'rulebased',

'tokenizers', 'achieve', '.'),

('But', 'rule-based', 'tokenizers', 'are', 'hard', 'to', 'maintain', 'and',

'their', 'rules', 'language', 'specific', '.'),

('We', 'show', 'that', 'high', 'accuracy', 'word', 'and', 'sentence',

'segmentation', 'can', 'be', 'achieved', 'by', 'using', 'supervised',

'sequence', 'labeling', 'on', 'the', 'character', 'level', 'combined',

'with', 'unsupervised', 'feature', 'learning', '.')

]

sents_with_positions = [

[(0, 12, 'Tokenization'), (13, 15, 'is'), (16, 22, 'widely'),

(23, 31, 'regarded'), (32, 34, 'as'), (35, 36, 'a'), (37, 43, 'solved'),

(44, 51, 'problem'), (52, 55, 'due'), (56, 58, 'to'), (59, 62, 'the'),

(63, 67, 'high'), (68, 76, 'accuracy'), (77, 81, 'that'),

(82, 91, 'rulebased'), (92, 102, 'tokenizers'), (103, 110, 'achieve'),

(110, 111, '.')],

[(0, 3, 'But'), (4, 14, 'rule-based'), (15, 25, 'tokenizers'),

(26, 29, 'are'), (30, 34, 'hard'), (35, 37, 'to'), (38, 46, 'maintain'),

(47, 50, 'and'), (51, 56, 'their'), (57, 62, 'rules'),

(63, 71, 'language'), (72, 80, 'specific'), (80, 81, '.')],

[(0, 2, 'We'), (3, 7, 'show'), (8, 12, 'that'), (13, 17, 'high'),

(18, 26, 'accuracy'), (27, 31, 'word'), (32, 35, 'and'),

(36, 44, 'sentence'), (45, 57, 'segmentation'), (58, 61, 'can'),

(62, 64, 'be'), (65, 73, 'achieved'), (74, 76, 'by'), (77, 82, 'using'),

(83, 93, 'supervised'), (94, 102, 'sequence'), (103, 111, 'labeling'),

(112, 114, 'on'), (115, 118, 'the'), (119, 128, 'character'),

(129, 134, 'level'), (135, 143, 'combined'), (144, 148, 'with'),

(149, 161, 'unsupervised'), (162, 169, 'feature'), (170, 178, 'learning'),

(178, 179, '.')]

]

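I've been doing it like this:

- iterating through each line of the text file, processing the tuple, and appending it to a list to get sents_with_positions

- and, when appending each processed sentence to sents_with_positions, appending the last element of each of the sentence's tuples to sents_words

Code: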

sents_with_positions = []
sents_words = []
_sent = []
for line in _input.split('\n'):
    if len(line.strip()) > 0:
        line = line[1:-1]
        start, _, next = line.partition(',')
        end, _, next = next.partition(',')
        text = next.strip()
        _sent.append((int(start), int(end), text))
    else:
        sents_with_positions.append(_sent)
        sents_words.append(list(zip(*_sent))[2])
        _sent = []

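But is there a simpler or cleaner way to achieve the same output? Maybe via regexes? Or some itertools trick?

Note that there are cases where the lines of the text file contain tricky tuples, e.g.

- (86, 87, )) # Sometimes the token/word is a bracket

- (96, 97, ()

- (87, 88, ,) # Sometimes the token/word is a comma

- (29, 33, Café) # The token/word is unicode (sometimes accented), so [a-zA-Z] might be insufficient

- (2, 3, 2) # Sometimes the token/word is a number

- (47, 52, 3,000) # Sometimes the token/word is a number/word with a comma

- (23, 29, (e.g.)) # Sometimes the token/word contains brackets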

7 answers:

Answer 0: (score: 7)

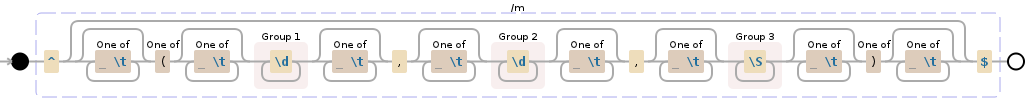

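In my opinion this is a bit more readable and clear, but it may be slightly less performant, and it assumes the input file is correctly formatted (e.g. empty lines are really empty, whereas your code works even if the "empty" lines contain some random whitespace). It leverages regex groups, which do all the work of parsing the lines; we only convert start and end to integers.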

import re

line_regex = re.compile(r'^\((\d+), (\d+), (.+)\)$', re.MULTILINE)
sents_with_positions = []
sents_words = []
for section in _input.split('\n\n'):
    words_with_positions = [
        (int(start), int(end), text)
        for start, end, text in line_regex.findall(section)
    ]
    words = tuple(t[2] for t in words_with_positions)
    sents_with_positions.append(words_with_positions)
    sents_words.append(words)
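
As a quick check, the same pattern can be run against the tricky tokens listed in the question. This is only a minimal sketch: the `sample` string is made up for illustration and is not the original data.

```python
import re

# Same pattern as in the answer: two integers, then everything up to the final ')'
line_regex = re.compile(r'^\((\d+), (\d+), (.+)\)$', re.MULTILINE)

# Hypothetical sample: two sentences separated by one empty line
sample = (
    "(86, 87, ))\n"
    "(87, 88, ,)\n"
    "(29, 33, Café)\n"
    "\n"
    "(47, 52, 3,000)\n"
    "(23, 29, (e.g.))\n"
)

sents_with_positions = [
    [(int(start), int(end), text)
     for start, end, text in line_regex.findall(section)]
    for section in sample.split('\n\n')
]
sents_words = [tuple(t[2] for t in sent) for sent in sents_with_positions]

print(sents_words)
```

Because `(.+)` is greedy and the pattern is anchored with `\)$`, only the last closing bracket on each line is treated as the end of the tuple, so bracket and comma tokens come through intact.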

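Answer 1: (score: 5)

Parsing a text file in chunks separated by some delimiter is a common problem.

It helps to have a utility function, such as open_chunk below, which can "chunkify" a text file given a regex delimiter. The open_chunk function yields one chunk at a time without reading the whole file at once, so it can be used on files of any size. Once you have identified the chunks, processing each one is relatively easy: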

import re

def open_chunk(readfunc, delimiter, chunksize=1024):
    """
    readfunc(chunksize) should return a string.

    http://stackoverflow.com/a/17508761/190597 (unutbu)
    """
    remainder = ''
    for chunk in iter(lambda: readfunc(chunksize), ''):
        pieces = re.split(delimiter, remainder + chunk)
        for piece in pieces[:-1]:
            yield piece
        remainder = pieces[-1]
    if remainder:
        yield remainder

sents_with_positions = []
sents_words = []
with open('data') as infile:
    for chunk in open_chunk(infile.read, r'\n\n'):
        row = []
        words = []
        # Taken from LeartS's answer: http://stackoverflow.com/a/34416814/190597
        for start, end, word in re.findall(
                r'\((\d+),\s*(\d+),\s*(.*)\)', chunk, re.MULTILINE):
            start, end = int(start), int(end)
            row.append((start, end, word))
            words.append(word)
        sents_with_positions.append(row)
        sents_words.append(words)

print(sents_words)
print(sents_with_positions)

This yields output that includes

(86, 87, ')'), (87, 88, ','), (96, 97, '(')
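
To see that open_chunk does not depend on where the read boundaries fall, it can be fed from an in-memory `io.StringIO` with a deliberately tiny `chunksize`, so that reads end in the middle of lines and delimiters. A minimal sketch; the sample text and the chunk size of 4 are arbitrary choices for illustration.

```python
import io
import re

def open_chunk(readfunc, delimiter, chunksize=1024):
    """Yield pieces of text separated by the regex `delimiter`."""
    remainder = ''
    for chunk in iter(lambda: readfunc(chunksize), ''):
        # Re-split the unfinished tail together with the newly read text
        pieces = re.split(delimiter, remainder + chunk)
        for piece in pieces[:-1]:
            yield piece
        remainder = pieces[-1]
    if remainder:
        yield remainder

# Hypothetical two-sentence input; StringIO.read(n) behaves like file.read(n)
text = "(0, 3, But)\n(4, 14, rule-based)\n\n(0, 2, We)\n(3, 7, show)\n"
chunks = list(open_chunk(io.StringIO(text).read, r'\n\n', chunksize=4))
print(chunks)
```

The unfinished tail is carried over in `remainder`, which is why a delimiter cut in half by a read is still found on the next iteration.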

Answer 2: (score: 4)

If you are using Python 3 and don't mind (87, 88, ,) becoming ('87', '88', ''), you can use csv.reader to parse the values, slicing off the outer ():

from itertools import groupby
from csv import reader

def yield_secs(fle):
    with open(fle) as f:
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c, *_ = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                yield tmp1, tmp2

for sec in yield_secs("test.txt"):
    print(sec)

You can fix this with if not c: c = ",", because the only way to get an empty string is when the token was a ,, and you will then get ('87', '88', ',').
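
A minimal sketch of what `csv.reader` returns for a few of the tricky lines, assuming the default dialect plus `skipinitialspace=True`. The helper name `third_field` is made up for illustration. Note that a token with an inner comma, such as `3,000`, is also split by the reader, so the `if not c` fix only covers the lone-comma case.

```python
from csv import reader

def third_field(line):
    """Return the third CSV field of a '(start, end, token)' line (hypothetical helper)."""
    # Slice off the outer parentheses and let csv split on commas
    row = next(reader([line[1:-1]], skipinitialspace=True))
    c = row[2]
    # The token ',' is read as an empty field, so restore it
    if not c:
        c = ","
    return c

print(third_field("(86, 87, ))"))      # bracket token
print(third_field("(87, 88, ,)"))      # comma token, restored by the fix
print(third_field("(47, 52, 3,000)"))  # only the part before the inner comma survives
```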

For Python 2, you just need to slice the first three elements to avoid an unpacking error:

from itertools import groupby, imap
from csv import reader

def yield_secs(fle):
    with open(fle) as f:
        for k, v in groupby(imap(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    t = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append(tuple(t[:3]))
                    # the word is the third field, not the first
                    tmp2.append(t[2])
                yield tmp1, tmp2

If you want to get all the data at once:

def yield_secs(fle):
    with open(fle) as f:
        sent_word, sent_with_position = [], []
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c, *_ = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                sent_word.append(tmp2)
                sent_with_position.append(tmp1)
        return sent_word, sent_with_position

# the names follow the order of the returned pair
sent_word, sent_with_position = yield_secs("test.txt")

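You can actually get by with just splitting and still keep any comma: a comma token can only appear at the end, and a comma inside a token such as 3,000 is not followed by a space, so t[1:-1].split(", ") will only split on the first two commas: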

def yield_secs(fle):
    with open(fle) as f:
        sent_word, sent_with_position = [], []
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c, *_ = t[1:-1].split(", ")
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                sent_word.append(tmp2)
                sent_with_position.append(tmp1)
        return sent_word, sent_with_position

# the file name must be passed in; "test.txt" as in the earlier snippets
snt, snt_pos = yield_secs("test.txt")

from pprint import pprint
pprint(snt)
pprint(snt_pos)

Which will give you:

[['Tokenization',

'is',

'widely',

'regarded',

'as',

'a',

'solved',

'problem',

'due',

'to',

'the',

'high',

'accuracy',

'that',

'rulebased',

'tokenizers',

'achieve',

'.'],

['But',

'rule-based',

'tokenizers',

'are',

'hard',

'to',

'maintain',

'and',

'their',

'rules',

'language',

'specific',

'.'],

['We',

'show',

'that',

'high',

'accuracy',

'word',

'and',

'sentence',

'segmentation',

'can',

'be',

'achieved',

'by',

'using',

'supervised',

'sequence',

'labeling',

'on',

'the',

'character',

'level',

'combined',

'with',

'unsupervised',

'feature',

'learning',

'.'],

['We',

'evaluated',

'our',

'method',

'on',

'three',

'languages',

'and',

'obtained',

'error',

'rates',

'of',

'0.27',

'‰',

'(',

'English',

')',

',',

'0.35',

'‰',

'(',

'Dutch',

')',

'and',

'0.76',

'‰',

'(',

'Italian',

')',

'for',

'our',

'best',

'models',

'.']]

[[('0', '12', 'Tokenization'),

('13', '15', 'is'),

('16', '22', 'widely'),

('23', '31', 'regarded'),

('32', '34', 'as'),

('35', '36', 'a'),

('37', '43', 'solved'),

('44', '51', 'problem'),

('52', '55', 'due'),

('56', '58', 'to'),

('59', '62', 'the'),

('63', '67', 'high'),

('68', '76', 'accuracy'),

('77', '81', 'that'),

('82', '91', 'rulebased'),

('92', '102', 'tokenizers'),

('103', '110', 'achieve'),

('110', '111', '.')],

[('0', '3', 'But'),

('4', '14', 'rule-based'),

('15', '25', 'tokenizers'),

('26', '29', 'are'),

('30', '34', 'hard'),

('35', '37', 'to'),

('38', '46', 'maintain'),

('47', '50', 'and'),

('51', '56', 'their'),

('57', '62', 'rules'),

('63', '71', 'language'),

('72', '80', 'specific'),

('80', '81', '.')],

[('0', '2', 'We'),

('3', '7', 'show'),

('8', '12', 'that'),

('13', '17', 'high'),

('18', '26', 'accuracy'),

('27', '31', 'word'),

('32', '35', 'and'),

('36', '44', 'sentence'),

('45', '57', 'segmentation'),

('58', '61', 'can'),

('62', '64', 'be'),

('65', '73', 'achieved'),

('74', '76', 'by'),

('77', '82', 'using'),

('83', '93', 'supervised'),

('94', '102', 'sequence'),

('103', '111', 'labeling'),

('112', '114', 'on'),

('115', '118', 'the'),

('119', '128', 'character'),

('129', '134', 'level'),

('135', '143', 'combined'),

('144', '148', 'with'),

('149', '161', 'unsupervised'),

('162', '169', 'feature'),

('170', '178', 'learning'),

('178', '179', '.')],

[('0', '2', 'We'),

('3', '12', 'evaluated'),

('13', '16', 'our'),

('17', '23', 'method'),

('24', '26', 'on'),

('27', '32', 'three'),

('33', '42', 'languages'),

('43', '46', 'and'),

('47', '55', 'obtained'),

('56', '61', 'error'),

('62', '67', 'rates'),

('68', '70', 'of'),

('71', '75', '0.27'),

('76', '77', '‰'),

('78', '79', '('),

('79', '86', 'English'),

('86', '87', ')'),

('87', '88', ','),

('89', '93', '0.35'),

('94', '95', '‰'),

('96', '97', '('),

('97', '102', 'Dutch'),

('102', '103', ')'),

('104', '107', 'and'),

('108', '112', '0.76'),

('113', '114', '‰'),

('115', '116', '('),

('116', '123', 'Italian'),

('123', '124', ')'),

('125', '128', 'for'),

('129', '132', 'our'),

('133', '137', 'best'),

('138', '144', 'models'),

('144', '145', '.')]]
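
The split-based variant relies on the separator being a comma followed by a space. A minimal sketch of that idea on the tricky tokens from the question, with `maxsplit=2` added here as an extra safeguard (it is not in the code above) so that a token that itself contains `, ` stays in one piece. `parse_line` is a hypothetical helper name, and unlike the code above it also converts the positions to `int`.

```python
def parse_line(line):
    """Split '(start, end, token)' into (int, int, str); hypothetical helper."""
    # Drop the outer parentheses, then split on the first two ', ' only
    start, end, token = line.strip()[1:-1].split(", ", 2)
    return int(start), int(end), token

for line in ["(87, 88, ,)", "(47, 52, 3,000)", "(23, 29, (e.g.))", "(0, 4, a, b)"]:
    print(parse_line(line))
```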

Answer 3: (score: 3)

You can use a regex and a deque, which is more optimized when you are dealing with large files:

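import re
from collections import deque

sents_with_positions = deque()
container = deque()
with open('myfile.txt') as f:
    for line in f:
        if line != '\n':
            try:
                # '\n?' also accepts a last line without a trailing newline
                matched_tuple = re.search(r'^\((\d+),\s?(\d+),\s?(.*)\)\n?$', line).groups()
            except AttributeError:
                pass
            else:
                container.append(matched_tuple)
        else:
            sents_with_positions.append(container)
            # start a new deque; clear() would also empty the one just stored
            container = deque()
# keep the last sentence if the file does not end with an empty line
if container:
    sents_with_positions.append(container)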

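Answer 4: (score: 2)

I have read many good answers, and some of them use approaches close to what I had in mind when reading the question. Anyway, I think I have something to add, so I decided to post.

Abstract

My solution is based on a single-line parsing approach, to deal with files that would not easily fit in memory.

The line decoding is done by a unicode-aware regex. It parses both the lines with data and the empty lines that end the current section. This makes the parsing process OS-agnostic with respect to the specific line separator (\n, \r, \r\n).

Just to be sure (when dealing with big files you never know), I also added fault tolerance for excess spaces or tabs in the input data.

For example, lines such as ( 0 , 4, röck ) or ( 86, 87 , )) are both parsed correctly (see the regex breakdown section below and the output of the online demo).

Code snippet (Ideone demo)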

import re

words = []
positions = []

# A multi-line pattern needs a triple-quoted string; ur'' is Python 2 only
pattern = re.compile(r'''^
    (?:
        [ \t]*[(][ \t]*
        (\d+)
        [ \t]*,[ \t]*
        (\d+)
        [ \t]*,[ \t]*
        (\S+)
        [ \t]*[)][ \t]*
    )?
    $''', re.UNICODE | re.VERBOSE)

w_buffer = []
p_buffer = []

# automatically close the file handler also in case of exception
with open('file.input') as fin:
    for line in fin:
        for (start, end, token) in re.findall(pattern, line):
            if start:
                w_buffer.append(token)
                p_buffer.append((int(start), int(end), token))
            else:
                words.append(tuple(w_buffer)); w_buffer = []
                positions.append(p_buffer); p_buffer = []

# flush the last sentence when the file does not end with an empty line
if w_buffer:
    words.append(tuple(w_buffer))
    positions.append(p_buffer)

# An optional prettified output
import pprint as pp
pp.pprint(words)
pp.pprint(positions)

Regex breakdown (Regex101 demo)

^                        # Start of the string
(?:                      # Start NCG1 (Non-Capturing Group 1)
  [ \t]* [(] [ \t]*      # (1): A literal opening round bracket (I prefer this over '\(')...
                         # ...surrounded by zero or more spaces or tabs
  (\d+)                  # One or more digits ([0-9]+) saved in CG1 (Capturing Group 1)
                         #
  [ \t]* , [ \t]*        # (2): A literal comma ','...
                         # ...surrounded by zero or more spaces or tabs
  (\d+)                  # One or more digits ([0-9]+) saved in CG2
                         #
  [ \t]* , [ \t]*        # see (2)
                         #
  (\S+)                  # One or more of any non-whitespace character...
                         # ...(same as [^\s]) saved in CG3
  [ \t]* [)] [ \t]*      # as (1), but with a literal closing round bracket
)?                       # Close NCG1, '?' makes the group optional...
                         # ...to also match empty lines (as '^$')
$                        # End of the string (with or without newline)
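
To try the pattern without a file, it can be applied to a few hand-written lines. A minimal sketch: the test lines are taken from the examples mentioned in this answer and in the question, and the pattern is written as a Python 3 triple-quoted raw string.

```python
import re

pattern = re.compile(r'''^
    (?:
        [ \t]*[(][ \t]*   # opening bracket with optional spaces/tabs
        (\d+)             # start position
        [ \t]*,[ \t]*
        (\d+)             # end position
        [ \t]*,[ \t]*
        (\S+)             # token: any run of non-whitespace characters
        [ \t]*[)][ \t]*   # closing bracket with optional spaces/tabs
    )?
    $''', re.VERBOSE)

for line in ["( 0 , 4, röck )\n", "( 86, 87 , ))\n", "(23, 29, (e.g.))\n", "\n"]:
    print(repr(line), pattern.findall(line))
```

An empty line produces a single match whose three groups are all empty strings, which is what the `if start:` test in the code snippet uses to detect the end of a sentence.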

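Answer 5: (score: 1)

I found this to be a nice challenge to solve with a single replacement regex.

I got the first part of the question working, leaving out some of the edge cases and trimming non-essential details.

Below is a screenshot of what I got working with the excellent RegexBuddy tool.

Do you want a pure regex solution like this, or are you looking for a solution that uses code to process intermediate regex results?

If you are looking for a pure regex solution, I don't mind spending more time to cater for the details.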

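Answer 6: (score: 0)

Each line of the text looks similar to a tuple. If the last component of each tuple were quoted, the lines could be eval'd. That is exactly what I have done: quoted the last component.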

from itertools import takewhile, repeat, dropwhile
from functools import partial

def quote_last(line):
    line = line.split(',', 2)
    last = line[-1].strip()
    if '"' in last:
        last = last.replace('"', r'\"')
    return eval('{0[0]}, {0[1]}, "{1}")'.format(line, last[:-1]))

skip_leading_empty_lines_if_any = partial(dropwhile, lambda line: not line.strip())
get_lines_between_empty_lines = partial(takewhile, lambda line: line.strip())
get_non_empty_lists = partial(takewhile, bool)

def get_tuples(lines):
    #non_empty_lines = takewhile(bool, (list(lst) for lst in (takewhile(lambda s: s.strip(), dropwhile(lambda x: not bool(x.strip()), it)) for it in repeat(iter(lines)))))
    list_of_non_empty_lines = get_non_empty_lists(list(lst) for lst in (get_lines_between_empty_lines(
        skip_leading_empty_lines_if_any(it)) for it in repeat(iter(lines))))
    return [[quote_last(line) for line in lst] for lst in list_of_non_empty_lines]

sents_with_positions = get_tuples(lines)
sents_words = [[t[-1] for t in lst] for lst in sents_with_positions]
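
Since `eval` executes whatever the line contains, a more cautious variant of the same quoting idea can use `repr` to quote the last component and `ast.literal_eval` to evaluate it. This is a sketch of a swapped-in technique, not the code above, and `quote_last_safe` is a hypothetical name.

```python
from ast import literal_eval

def quote_last_safe(line):
    """Quote the third component with repr(), then parse with literal_eval."""
    # Drop the outer parentheses and split on the first two commas only
    start, end, last = line.strip()[1:-1].split(',', 2)
    # repr() escapes quotes and backslashes, so the result is a valid literal
    return literal_eval('({}, {}, {!r})'.format(start.strip(), end.strip(), last.strip()))

print(quote_last_safe('(86, 87, ))'))
print(quote_last_safe('(5, 6, ")'))
print(quote_last_safe('(47, 52, 3,000)'))
```

`literal_eval` only accepts Python literals, so a malformed line raises an exception instead of running arbitrary code.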
