Zeppelin spawns multiple Java processes

I am currently running the latest Zeppelin source on a master server with HDP 2.5, and I also have one worker server.

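On the master server I found several Java processes, spawned over the past 12 days, that never finished and keep consuming memory. At some point memory fills up and Zeppelin can no longer run under its YARN queue. I have a queue system in YARN: one queue for JobServer and another for Zeppelin. Zeppelin currently runs as root, but that will change so that each service uses its own service account. The system is CentOS 7.2.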

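The logs show the following processes; I have split their command lines across lines for readability. Processes 1 to 3 appear to be Zeppelin; I don't know what processes 4 and 5 are. My questions are: Is there a configuration problem? Why doesn't zeppelin-daemon kill these Java processes? What can fix this?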

<p><strong>PROCESS #1</strong>

/usr/java/default/bin/java

-Dhdp.version=2.4.2.0-258

-cp /usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes/

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

:/usr/hdp/current/spark-thriftserver/conf/:/usr/hdp/2.4.2.0-258/spark/lib/spark-assembly-1.6.1.2.4.2.0-258-hadoop2.7.1.2.4.2.0-258.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-api-jdo-3.2.6.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-core-3.2.10.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-rdbms-3.2.9.jar

:/etc/hadoop/conf/

-Xms1g

-Xmx1g

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log org.apache.spark.deploy.SparkSubmit --conf spark.driver.extraClassPath=::/usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

--conf spark.driver.extraJavaOptions=

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log

--class org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer

/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar 44001

</p><p><strong>PROCESS #2 </strong>

/usr/java/default/bin/java -Dhdp.version=2.4.2.0-258

-cp /usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes/

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

:/usr/hdp/current/spark-thriftserver/conf/

:/usr/hdp/2.4.2.0-258/spark/lib/spark-assembly-1.6.1.2.4.2.0-258-hadoop2.7.1.2.4.2.0-258.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-api-jdo-3.2.6.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-core-3.2.10.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-rdbms-3.2.9.jar

:/etc/hadoop/conf/

-Xms1g

-Xmx1g

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log

org.apache.spark.deploy.SparkSubmit

--conf spark.driver.extraClassPath=

:

:/usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

--conf spark.driver.extraJavaOptions=

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log

--class org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer

/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

40641

</p><p><strong>PROCESS #3</strong>

/usr/java/default/bin/java

-Dhdp.version=2.4.2.0-258

-cp /usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes/

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

:/usr/hdp/current/spark-thriftserver/conf/

:/usr/hdp/2.4.2.0-258/spark/lib/spark-assembly-1.6.1.2.4.2.0-258-hadoop2.7.1.2.4.2.0-258.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-api-jdo-3.2.6.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-core-3.2.10.jar

:/usr/hdp/2.4.2.0-258/spark/lib/datanucleus-rdbms-3.2.9.jar

:/etc/hadoop/conf/

-Xms1g

-Xmx1g

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log

org.apache.spark.deploy.SparkSubmit

--conf spark.driver.extraClassPath=::/usr/hdp/2.4.2.0-258/zeppelin/local-repo/2BXMTZ239/*

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar

--conf spark.driver.extraJavaOptions=

-Dfile.encoding=UTF-8 -Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-spark-root-cool-server-name1.log

--class org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer /usr/hdp/2.4.2.0-258/zeppelin/interpreter/spark/zeppelin-spark_2.10-0.7.0-SNAPSHOT.jar 60887

</p><p><strong>PROCESS #4</strong>

/usr/java/default/bin/java

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-cassandra-root-cool-server-name1.log

-Xms1024m

-Xmx1024m

-XX:MaxPermSize=512m

-cp ::/usr/hdp/2.4.2.0-258/zeppelin/interpreter/cassandra/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

:

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer

</p><p><strong>PROCESS #5</strong>

/usr/java/default/bin/java

-Dfile.encoding=UTF-8

-Dlog4j.configuration=file:///usr/hdp/2.4.2.0-258/zeppelin/conf/log4j.properties

-Dzeppelin.log.file=/var/log/zeppelin/zeppelin-interpreter-cassandra-root-cool-server-name1.log

-Xms1024m -Xmx1024m -XX:MaxPermSize=512m

-cp ::/usr/hdp/2.4.2.0-258/zeppelin/interpreter/cassandra/*

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/lib/*

::/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-interpreter/target/test-classes

:/usr/hdp/2.4.2.0-258/zeppelin/zeppelin-zengine/target/test-classes org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer </p>

1 answer:

Answer 0 (score: 2)

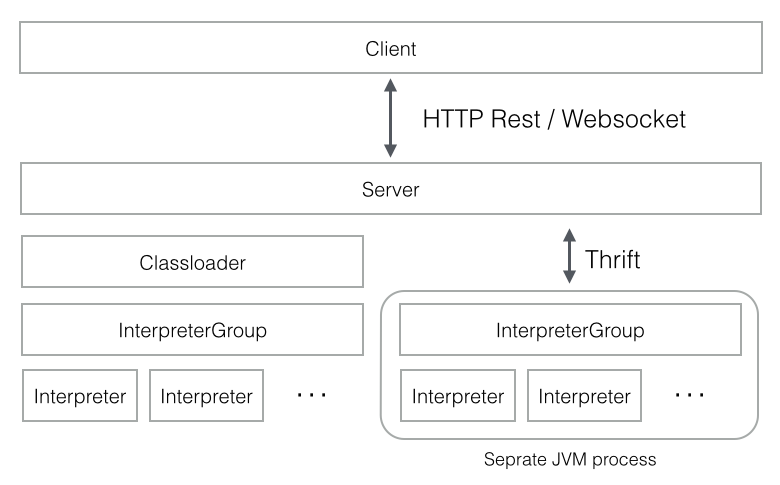

Apache Zeppelin uses a multi-process architecture, in which each interpreter runs as at least one separate JVM process and communicates with ZeppelinServer over the Apache Thrift protocol.

Processes 4 and 5 look like Cassandra interpreter processes.
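The interpreter each process belongs to can be read from its command line: every process in the question runs `org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer`, and its `-Dzeppelin.log.file` value embeds the interpreter name (`zeppelin-interpreter-spark-...`, `zeppelin-interpreter-cassandra-...`). Below is a minimal Python sketch of that check. The helper names `interpreter_of` and `count_interpreters` are hypothetical, and the log-file naming pattern is an assumption taken only from the command lines shown in the question.

```python
import re

# Assumed naming pattern, inferred from the question's command lines:
#   -Dzeppelin.log.file=<dir>/zeppelin-interpreter-<interpreter>-<user>-<host>.log
LOG_FILE_RE = re.compile(
    r"-Dzeppelin\.log\.file=\S*/zeppelin-interpreter-([A-Za-z0-9_]+)-"
)
SERVER_CLASS = "org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer"


def interpreter_of(cmdline):
    """Return the interpreter name for a Zeppelin interpreter JVM, else None."""
    if SERVER_CLASS not in cmdline:
        return None  # not a Zeppelin interpreter process
    match = LOG_FILE_RE.search(cmdline)
    # Interpreter processes without a recognizable log file are still counted.
    return match.group(1) if match else "unknown"


def count_interpreters(cmdlines):
    """Count running interpreter JVMs per interpreter name."""
    counts = {}
    for cmdline in cmdlines:
        name = interpreter_of(cmdline)
        if name is not None:
            counts[name] = counts.get(name, 0) + 1
    return counts
```

The input lines could come from something like `ps -eo args` on the master server, one command line per entry. Applied to the five processes in the question, this approach would group processes 1 to 3 under `spark` and processes 4 and 5 under `cassandra`.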

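You can shut them down or restart them at any time from the Interpreter menu in the Zeppelin UI. See the official Zeppelin docs for more on this and other interpreter-related features.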
