дҪҝз”ЁpythonжӯЈеҲҷиЎЁиҫҫејҸд»Һж–Үжң¬дёӯжҸҗеҸ–жҹҗдәӣURL

жүҖд»ҘжҲ‘д»ҺNPRйЎөйқўиҺ·еҫ—дәҶHTMLпјҢжҲ‘жғідҪҝз”ЁжӯЈеҲҷиЎЁиҫҫејҸдёәжҲ‘жҸҗеҸ–жҹҗдәӣURLпјҲиҝҷдәӣURLз§°дёәеөҢеҘ—еңЁйЎөйқўдёӯзҡ„зү№е®ҡж•…дәӢзҡ„URLпјүгҖӮе®һйҷ…й“ҫжҺҘжҳҫзӨәеңЁж–Үжң¬дёӯпјҲжүӢеҠЁжЈҖзҙўпјүпјҡ

<a href="http://www.npr.org/blogs/parallels/2014/11/11/363018388/how-the-islamic-state-wages-its-propaganda-war">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363309020/asked-to-stop-praying-alaska-school-won-t-host-state-tournament">

<a href="http://www.npr.org/2014/11/11/362817642/a-marines-parents-story-their-memories-that-you-should-hear">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363288744/comets-rugged-landscape-makes-landing-a-roll-of-the-dice">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363293514/for-dyslexics-a-font-and-a-dictionary-that-are-meant-to-help">

import nltk

import re

f = open("/Users/shannonmcgregor/Desktop/npr.txt")

npr_lines = f.readlines()

f.close()

жҲ‘жңүиҝҷдёӘд»Јз ҒжқҘжҠ“дҪҸд№Ӣй—ҙзҡ„дёҖеҲҮпјҲ

for line in npr_lines:

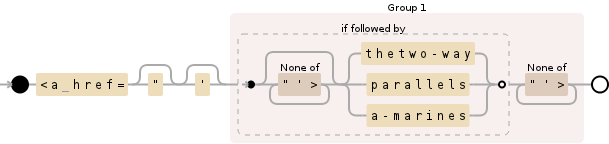

re.findall('<a href="?\'?([^"\'>]*)', line)

дҪҶиҝҷжҠ“дҪҸдәҶжүҖжңүзҪ‘еқҖгҖӮжҲ‘е°қиҜ•ж·»еҠ зұ»дјјзҡ„дёңиҘҝпјҡ

(parallels|thetwo-way|a-marines)

дҪҶжІЎжңүд»»дҪ•еӣһжҠҘгҖӮйӮЈд№ҲжҲ‘еҒҡй”ҷдәҶд»Җд№ҲпјҹжҲ‘еҰӮдҪ•е°ҶиҫғеӨ§зҡ„зҪ‘еқҖеүҘзҰ»еҷЁдёҺиҝҷдәӣе®ҡдҪҚз»ҷе®ҡзҪ‘еқҖзҡ„зү№е®ҡеӯ—иҜҚз»„еҗҲеңЁдёҖиө·пјҹ

иҜ·пјҢи°ўи°ўдҪ пјҡпјү

3 дёӘзӯ”жЎҲ:

зӯ”жЎҲ 0 :(еҫ—еҲҶпјҡ2)

йҖҡиҝҮдё“й—Ёз”ЁдәҺи§Јжһҗhtmlе’ҢxmlдёӘж–Ү件[BeautifulSoup]зҡ„е·Ҙе…·пјҢ

>>> from bs4 import BeautifulSoup

>>> s = """<a href="http://www.npr.org/blogs/parallels/2014/11/11/363018388/how-the-islamic-state-wages-its-propaganda-war">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363309020/asked-to-stop-praying-alaska-school-won-t-host-state-tournament">

<a href="http://www.npr.org/2014/11/11/362817642/a-marines-parents-story-their-memories-that-you-should-hear">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363288744/comets-rugged-landscape-makes-landing-a-roll-of-the-dice">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363293514/for-dyslexics-a-font-and-a-dictionary-that-are-meant-to-help">"""

>>> soup = BeautifulSoup(s) # or pass the file directly into BS like >>> soup = BeautifulSoup(open('/Users/shannonmcgregor/Desktop/npr.txt'))

>>> atag = soup.find_all('a')

>>> links = [i['href'] for i in atag]

>>> import re

>>> for i in links:

if re.match(r'.*(parallels|thetwo-way|a-marines).*', i):

print(i)

http://www.npr.org/blogs/parallels/2014/11/11/363018388/how-the-islamic-state-wages-its-propaganda-war

http://www.npr.org/blogs/thetwo-way/2014/11/11/363309020/asked-to-stop-praying-alaska-school-won-t-host-state-tournament

http://www.npr.org/2014/11/11/362817642/a-marines-parents-story-their-memories-that-you-should-hear

http://www.npr.org/blogs/thetwo-way/2014/11/11/363288744/comets-rugged-landscape-makes-landing-a-roll-of-the-dice

http://www.npr.org/blogs/thetwo-way/2014/11/11/363293514/for-dyslexics-a-font-and-a-dictionary-that-are-meant-to-help

зӯ”жЎҲ 1 :(еҫ—еҲҶпјҡ0)

жӮЁеҸҜд»ҘдҪҝз”Ё lookahead пјҡ

жқҘе®ҢжҲҗжӯӨж“ҚдҪң<a href="?\'?((?=[^"\'>]*(?:thetwo\-way|parallels|a\-marines))[^"\'>]+)

зӯ”жЎҲ 2 :(еҫ—еҲҶпјҡ0)

жӮЁеҸҜд»ҘдҪҝз”Ёre.searchеҮҪж•°жқҘеҢ№й…ҚиЎҢдёӯзҡ„жӯЈеҲҷиЎЁиҫҫејҸпјҢеҰӮжһңеҢ№й…Қдёә

>>> file = open('/Users/shannonmcgregor/Desktop/npr.txt', 'r')

>>> for line in file:

... if re.search('<a href=[^>]*(parallels|thetwo-way|a-marines)', line):

... print line

е°Ҷиҫ“еҮәдёә

<a href="http://www.npr.org/blogs/parallels/2014/11/11/363018388/how-the-islamic-state-wages-its-propaganda-war">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363309020/asked-to-stop-praying-alaska-school-won-t-host-state-tournament">

<a href="http://www.npr.org/2014/11/11/362817642/a-marines-parents-story-their-memories-that-you-should-hear">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363288744/comets-rugged-landscape-makes-landing-a-roll-of-the-dice">

<a href="http://www.npr.org/blogs/thetwo-way/2014/11/11/363293514/for-dyslexics-a-font-and-a-dictionary-that-are-meant-to-help">

зӣёе…ій—®йўҳ

- д»ҺPHPдёӯзҡ„ж–Үжң¬дёӯжҸҗеҸ–URL

- д»Һж–Үжң¬ж–Ү件дёӯжҸҗеҸ–URL

- дҪҝз”ЁжӯЈеҲҷиЎЁиҫҫејҸд»ҺPythonдёӯзҡ„еӯ—з¬ҰдёІдёӯжҸҗеҸ–жҹҗдәӣж–Үжң¬пјҹ

- дҪҝз”ЁpythonжӯЈеҲҷиЎЁиҫҫејҸд»Һж–Үжң¬дёӯжҸҗеҸ–жҹҗдәӣURL

- дҪҝз”Ёpython regexжҸҗеҸ–е№ІеҮҖзҡ„URL

- д»Һж–Үжң¬дёӯжҸҗеҸ–еӨҚжқӮURL

- дҪҝз”ЁжӯЈеҲҷиЎЁиҫҫејҸжҸҗеҸ–жҹҗдәӣж–Үжң¬

- д»Һж–Үжң¬

- д»Һж–Үжң¬дёӯжҸҗеҸ–зү№е®ҡзҡ„URL

- дҪҝз”ЁжӯЈеҲҷиЎЁиҫҫејҸжҸҗеҸ–ж–Үжң¬иҖҢдёҚжҸҗеҸ–зҪ‘еқҖпјҹ

жңҖж–°й—®йўҳ

- жҲ‘еҶҷдәҶиҝҷж®өд»Јз ҒпјҢдҪҶжҲ‘ж— жі•зҗҶи§ЈжҲ‘зҡ„й”ҷиҜҜ

- жҲ‘ж— жі•д»ҺдёҖдёӘд»Јз Ғе®һдҫӢзҡ„еҲ—иЎЁдёӯеҲ йҷӨ None еҖјпјҢдҪҶжҲ‘еҸҜд»ҘеңЁеҸҰдёҖдёӘе®һдҫӢдёӯгҖӮдёәд»Җд№Ҳе®ғйҖӮз”ЁдәҺдёҖдёӘз»ҶеҲҶеёӮеңәиҖҢдёҚйҖӮз”ЁдәҺеҸҰдёҖдёӘз»ҶеҲҶеёӮеңәпјҹ

- жҳҜеҗҰжңүеҸҜиғҪдҪҝ loadstring дёҚеҸҜиғҪзӯүдәҺжү“еҚ°пјҹеҚўйҳҝ

- javaдёӯзҡ„random.expovariate()

- Appscript йҖҡиҝҮдјҡи®®еңЁ Google ж—ҘеҺҶдёӯеҸ‘йҖҒз”өеӯҗйӮ®д»¶е’ҢеҲӣе»әжҙ»еҠЁ

- дёәд»Җд№ҲжҲ‘зҡ„ Onclick з®ӯеӨҙеҠҹиғҪеңЁ React дёӯдёҚиө·дҪңз”Ёпјҹ

- еңЁжӯӨд»Јз ҒдёӯжҳҜеҗҰжңүдҪҝз”ЁвҖңthisвҖқзҡ„жӣҝд»Јж–№жі•пјҹ

- еңЁ SQL Server е’Ң PostgreSQL дёҠжҹҘиҜўпјҢжҲ‘еҰӮдҪ•д»Һ第дёҖдёӘиЎЁиҺ·еҫ—第дәҢдёӘиЎЁзҡ„еҸҜи§ҶеҢ–

- жҜҸеҚғдёӘж•°еӯ—еҫ—еҲ°

- жӣҙж–°дәҶеҹҺеёӮиҫ№з•Ң KML ж–Ү件зҡ„жқҘжәҗпјҹ