Kubernetes: Weave picked a public IP on one of the worker nodes

I have a Kubernetes cluster with 2 master nodes and 2 worker nodes. Each node has a private IP in the 192.168.5.X range and a public IP. After I created the weave DaemonSet, the weave pod picked the correct internal IP on one node, but on the other node it picked the public IP. Is there a way to instruct the weave pod to pick the private IP on the node?
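For context: the standard weave-net DaemonSet runs its pods with host networking (hostNetwork: true), so the IP listed for each weave pod is the address its node registered with; in the outputs further down, each weave pod's IP matches its node's InternalIP. The kubelet's --node-ip flag sets that address explicitly instead of leaving it to automatic detection. The sketch below only illustrates the selection rule (take the interface address inside the private range). It assumes that range is 192.168.5.0/24; the interface names and the 192.168.5.13 address for worker2 are hypothetical.

```python
import ipaddress

# Assumed private (host-only) network; the question only says "192.168.5.X".
PRIVATE_NET = ipaddress.ip_network("192.168.5.0/24")


def pick_node_ip(iface_addrs, private_net=PRIVATE_NET):
    """Return the first interface address inside private_net, or None.

    iface_addrs maps interface name -> IPv4 address string (hypothetical
    input format, e.g. transcribed from `ip -4 addr` on the node).
    """
    # Sort by interface name so the result does not depend on dict order.
    for _iface, addr in sorted(iface_addrs.items()):
        if ipaddress.ip_address(addr) in private_net:
            return addr
    return None


if __name__ == "__main__":
    # Hypothetical worker2 interfaces: eth0 = VirtualBox NAT, eth1 = host-only.
    worker2 = {"eth0": "10.0.2.15", "eth1": "192.168.5.13"}
    # The chosen address is what would be handed to the kubelet.
    print(f"--node-ip={pick_node_ip(worker2)}")  # → --node-ip=192.168.5.13
```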

I built the cluster from scratch, doing everything manually on VMs created in VirtualBox on my local laptop. I followed this guide:

https://github.com/mmumshad/kubernetes-the-hard-way

After I deployed the weave containers on the worker nodes, the weave container on one of the workers uses the NAT IP, as shown below.

10.0.2.15 is the NAT IP, while 192.168.5.12 is the internal IP.

```
$ kubectl get pods -n kube-system -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP             NODE      NOMINATED NODE   READINESS GATES
weave-net-p4czj   2/2     Running   2          26h   192.168.5.12   worker1   <none>           <none>
weave-net-pbb86   2/2     Running   8          25h   10.0.2.15      worker2   <none>           <none>
```
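The mismatch in this listing can be spotted mechanically. A minimal sketch, assuming the private network is 192.168.5.0/24 and the column layout shown above (IP in the sixth column, NODE in the seventh); newer kubectl versions may print extra text in the RESTARTS column, which would shift the columns.

```python
import ipaddress

PRIVATE_NET = ipaddress.ip_network("192.168.5.0/24")  # assumed host-only network


def pods_off_private_net(wide_output, private_net=PRIVATE_NET):
    """Return (pod, ip, node) for weave-net pods whose IP is outside private_net.

    wide_output is the text of `kubectl get pods -n kube-system -o wide`.
    Assumes whitespace-separated columns with IP 6th and NODE 7th.
    """
    flagged = []
    lines = wide_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        cols = line.split()
        if len(cols) < 7 or not cols[0].startswith("weave-net-"):
            continue
        name, ip, node = cols[0], cols[5], cols[6]
        if ipaddress.ip_address(ip) not in private_net:
            flagged.append((name, ip, node))
    return flagged


if __name__ == "__main__":
    sample = (
        "NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES\n"
        "weave-net-p4czj 2/2 Running 2 26h 192.168.5.12 worker1 <none> <none>\n"
        "weave-net-pbb86 2/2 Running 8 25h 10.0.2.15 worker2 <none> <none>\n"
    )
    print(pods_off_private_net(sample))
    # → [('weave-net-pbb86', '10.0.2.15', 'worker2')]
```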

```
[@master1 ~]$ kubectl describe node
Name:               worker1
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/hostname=worker1
Annotations:        node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Tue, 10 Dec 2019 02:07:09 -0500
Taints:             <none>
Unschedulable:      false
Conditions:
  Type                Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----                ------  -----------------                 ------------------                ------                       -------
  NetworkUnavailable  False   Wed, 11 Dec 2019 04:50:15 -0500   Wed, 11 Dec 2019 04:50:15 -0500   WeaveIsUp                    Weave pod has set this
  MemoryPressure      False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 02:09:09 -0500   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure        False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 02:09:09 -0500   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure         False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 02:09:09 -0500   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready               True    Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 04:16:26 -0500   KubeletReady                 kubelet is posting ready status
Addresses:
  InternalIP:  192.168.5.12
  Hostname:    worker1
Capacity:
 cpu:                1
 ephemeral-storage:  14078Mi
 hugepages-2Mi:      0
 memory:             499552Ki
 pods:               110
Allocatable:
 cpu:                1
 ephemeral-storage:  13285667614
 hugepages-2Mi:      0
 memory:             397152Ki
 pods:               110
System Info:
 Machine ID:                 455146bc2c2f478a859bf39ac2641d79
 System UUID:                D4C6F432-3C7F-4D27-A21B-D78A0D732FB6
 Boot ID:                    25160713-e53e-4a9f-b1f5-eec018996161
 Kernel Version:             4.4.206-1.el7.elrepo.x86_64
 OS Image:                   CentOS Linux 7 (Core)
 Operating System:           linux
 Architecture:               amd64
 Container Runtime Version:  docker://18.6.3
 Kubelet Version:            v1.13.0
 Kube-Proxy Version:         v1.13.0
Non-terminated Pods:         (2 in total)
  Namespace                  Name                   CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
  ---------                  ----                   ------------  ----------  ---------------  -------------  ---
  default                    ng1-6677cd8f9-hws8n    0 (0%)        0 (0%)      0 (0%)           0 (0%)         26h
  kube-system                weave-net-p4czj        20m (2%)      0 (0%)      0 (0%)           0 (0%)         26h
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests  Limits
  --------           --------  ------
  cpu                20m (2%)  0 (0%)
  memory             0 (0%)    0 (0%)
  ephemeral-storage  0 (0%)    0 (0%)
Events:              <none>

Name:               worker2
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/hostname=worker2
Annotations:        node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Tue, 10 Dec 2019 03:14:01 -0500
Taints:             <none>
Unschedulable:      false
Conditions:
  Type                Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----                ------  -----------------                 ------------------                ------                       -------
  NetworkUnavailable  False   Wed, 11 Dec 2019 04:50:32 -0500   Wed, 11 Dec 2019 04:50:32 -0500   WeaveIsUp                    Weave pod has set this
  MemoryPressure      False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 03:14:03 -0500   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure        False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 03:14:03 -0500   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure         False   Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 03:14:03 -0500   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready               True    Wed, 11 Dec 2019 07:13:43 -0500   Tue, 10 Dec 2019 03:56:47 -0500   KubeletReady                 kubelet is posting ready status
Addresses:
  InternalIP:  10.0.2.15
  Hostname:    worker2
Capacity:
 cpu:                1
 ephemeral-storage:  14078Mi
 hugepages-2Mi:      0
 memory:             499552Ki
 pods:               110
Allocatable:
 cpu:                1
 ephemeral-storage:  13285667614
 hugepages-2Mi:      0
 memory:             397152Ki
 pods:               110
System Info:
 Machine ID:                 455146bc2c2f478a859bf39ac2641d79
 System UUID:                68F543D7-EDBF-4AF6-8354-A99D96D994EF
 Boot ID:                    5775abf1-97dc-411f-a5a0-67f51cc8daf3
 Kernel Version:             4.4.206-1.el7.elrepo.x86_64
 OS Image:                   CentOS Linux 7 (Core)
 Operating System:           linux
 Architecture:               amd64
 Container Runtime Version:  docker://18.6.3
 Kubelet Version:            v1.13.0
 Kube-Proxy Version:         v1.13.0
Non-terminated Pods:         (2 in total)
  Namespace                  Name                   CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
  ---------                  ----                   ------------  ----------  ---------------  -------------  ---
  default                    ng2-569d45c6b5-ppkwg   0 (0%)        0 (0%)      0 (0%)           0 (0%)         26h
  kube-system                weave-net-pbb86        20m (2%)      0 (0%)      0 (0%)           0 (0%)         26h
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests  Limits
  --------           --------  ------
  cpu                20m (2%)  0 (0%)
  memory             0 (0%)    0 (0%)
  ephemeral-storage  0 (0%)    0 (0%)
Events:              <none>
```

Answer (score: 0):

I can see that the IPs differ not only between the pods but also between the nodes.

As you can see in the kubectl describe node output, the InternalIP of worker1 is 192.168.5.12, while the InternalIP of worker2 is 10.0.2.15.
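The same comparison can be read from the Node objects directly instead of the describe text. A minimal sketch that collects the InternalIP entry from each node's status.addresses in kubectl get nodes -o json; fetch_nodes_json assumes kubectl is on PATH and configured for this cluster.

```python
import json
import subprocess


def internal_ips(nodes_json):
    """Map node name -> InternalIP from `kubectl get nodes -o json` output."""
    result = {}
    for item in json.loads(nodes_json)["items"]:
        for addr in item["status"].get("addresses", []):
            if addr["type"] == "InternalIP":
                result[item["metadata"]["name"]] = addr["address"]
    return result


def fetch_nodes_json():
    """Run kubectl and return its JSON output as a string."""
    return subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
```

On this cluster, internal_ips(fetch_nodes_json()) should map worker1 to 192.168.5.12 and worker2 to 10.0.2.15, matching the describe output.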

This is not the expected behavior, so it is important to make sure that both VirtualBox VMs are attached to the same adapter type.

Both nodes should be on the same network. In the comments you confirmed that this was the issue, which explains the behavior.
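To check the "same network" condition across all nodes, a minimal sketch that groups node addresses by subnet. The /24 prefix is an assumption based on the 192.168.5.X range in the question; a correctly wired cluster should produce a single group.

```python
import ipaddress
from collections import defaultdict


def group_by_subnet(node_ips, prefixlen=24):
    """Group node names by the /prefixlen network their address falls in.

    node_ips maps node name -> IP string (e.g. each node's InternalIP).
    """
    groups = defaultdict(list)
    for node, ip in sorted(node_ips.items()):
        # strict=False lets a host address stand in for its network.
        net = ipaddress.ip_network(f"{ip}/{prefixlen}", strict=False)
        groups[str(net)].append(node)
    return dict(groups)


if __name__ == "__main__":
    print(group_by_subnet({"worker1": "192.168.5.12", "worker2": "10.0.2.15"}))
    # → {'192.168.5.0/24': ['worker1'], '10.0.2.0/24': ['worker2']}
```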

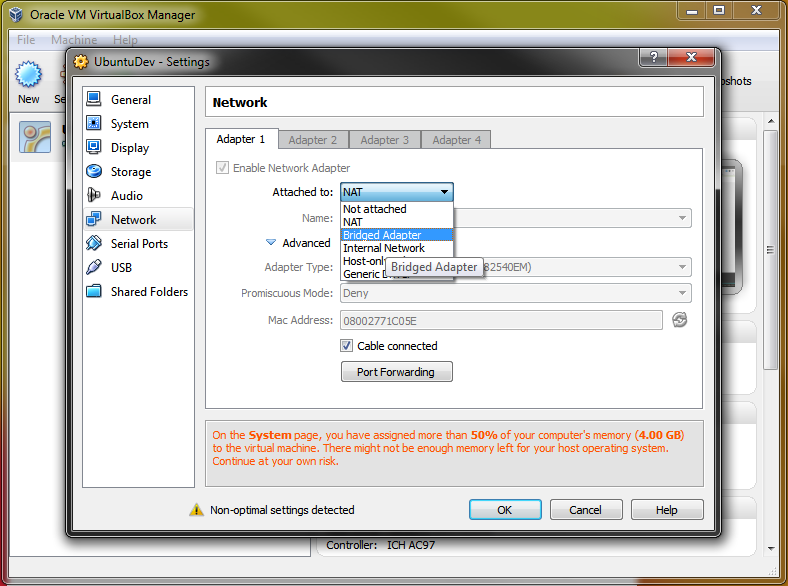

д»ҘдёӢжҳҜиҜҘй…ҚзҪ®зҡ„зӨәдҫӢпјҡ

As you mentioned in the comments, the first node was added manually and the second node was added during TLS bootstrapping, which registered it even though its IP address was "wrong".

To fix this, your best option is to bootstrap the cluster again from scratch, using the same adapter settings in VirtualBox for all nodes.
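One way to apply identical adapter settings is VBoxManage modifyvm, run while each VM is powered off. The sketch below only builds the commands. It assumes the layout implied by the question (adapter 1 NAT, adapter 2 host-only on the 192.168.5.X network); the VM names and the vboxnet0 host-only interface name are hypothetical placeholders.

```python
def vboxmanage_nic_commands(vm_names, hostonly_adapter="vboxnet0"):
    """Build identical `VBoxManage modifyvm` argument lists for every VM.

    Adapter 1 is NAT and adapter 2 is host-only on the same host-only
    interface for all VMs. VBoxManage modifyvm requires the VM to be off.
    """
    commands = []
    for vm in vm_names:
        commands.append([
            "VBoxManage", "modifyvm", vm,
            "--nic1", "nat",
            "--nic2", "hostonly",
            "--hostonlyadapter2", hostonly_adapter,
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical VM names; use the names shown in VirtualBox Manager.
    for cmd in vboxmanage_nic_commands(["master1", "master2", "worker1", "worker2"]):
        print(" ".join(cmd))
```

Generating every command from one function keeps the adapter layout identical across nodes, which is the property the answer says was missing.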
